An Information-Theoretic Perspective on Variance-Invariance-Covariance Regularization

R Shwartz-Ziv, R Balestriero, K Kawaguchi, T G. J. Rudner, Y LeCun

[New York University & Meta AI]


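Key points:

- Shifts the stochasticity assumption from the network to its inputs, so that deterministic networks can be studied from an information-theoretic perspective;
- Relates the VICReg objective to information-theoretic quantities and highlights the objective's underlying assumptions;
- Derives a generalization bound that connects VICReg, information theory, and downstream generalization;
- Proposes information-theoretic self-supervised learning methods and validates them empirically, with the goal of improving transfer learning.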

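One-sentence summary:

Analyzes Variance-Invariance-Covariance Regularization (VICReg) for self-supervised learning from an information-theoretic perspective and proposes new self-supervised learning methods that outperform existing ones.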
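For reference, the VICReg loss that this work analyzes combines three terms computed on the embeddings of two augmented views of the same batch: an invariance term (mean squared error between the two views), a variance term (a hinge that keeps each dimension's standard deviation above γ), and a covariance term (squared off-diagonal covariance entries, divided by the embedding dimension d). The sketch below follows the formulation of Bardes, Ponce and LeCun (2022), including their default weights λ = μ = 25, ν = 1, γ = 1. The function names, the per-element normalization of the invariance term, and eps are assumptions of this sketch, not details from the paper summarized here.

```python
import numpy as np


def off_diagonal(m: np.ndarray) -> np.ndarray:
    """Return all off-diagonal entries of a square matrix as a flat array."""
    return m[~np.eye(m.shape[0], dtype=bool)]


def vicreg_loss(z_a: np.ndarray, z_b: np.ndarray,
                lam: float = 25.0, mu: float = 25.0, nu: float = 1.0,
                gamma: float = 1.0, eps: float = 1e-4) -> float:
    """Sketch of the VICReg loss for two (n, d) embedding batches of the same inputs."""
    n, d = z_a.shape

    # Invariance: mean squared error between the embeddings of the two views.
    invariance = np.mean((z_a - z_b) ** 2)

    # Variance: hinge keeping each dimension's (regularized) std above gamma.
    def variance_term(z: np.ndarray) -> float:
        std = np.sqrt(z.var(axis=0, ddof=1) + eps)
        return np.mean(np.maximum(0.0, gamma - std))

    # Covariance: squared off-diagonal entries of the sample covariance, scaled by 1/d.
    def covariance_term(z: np.ndarray) -> float:
        zc = z - z.mean(axis=0)
        cov = zc.T @ zc / (n - 1)
        return np.sum(off_diagonal(cov) ** 2) / d

    variance = variance_term(z_a) + variance_term(z_b)
    covariance = covariance_term(z_a) + covariance_term(z_b)
    return float(lam * invariance + mu * variance + nu * covariance)


rng = np.random.default_rng(0)
z = rng.normal(size=(256, 32))
print(vicreg_loss(z, z + 0.01 * rng.normal(size=z.shape)))
```

A collapsed batch (every row identical) has zero invariance and covariance loss but still pays the full variance penalty, which is the failure mode the variance term is meant to prevent.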

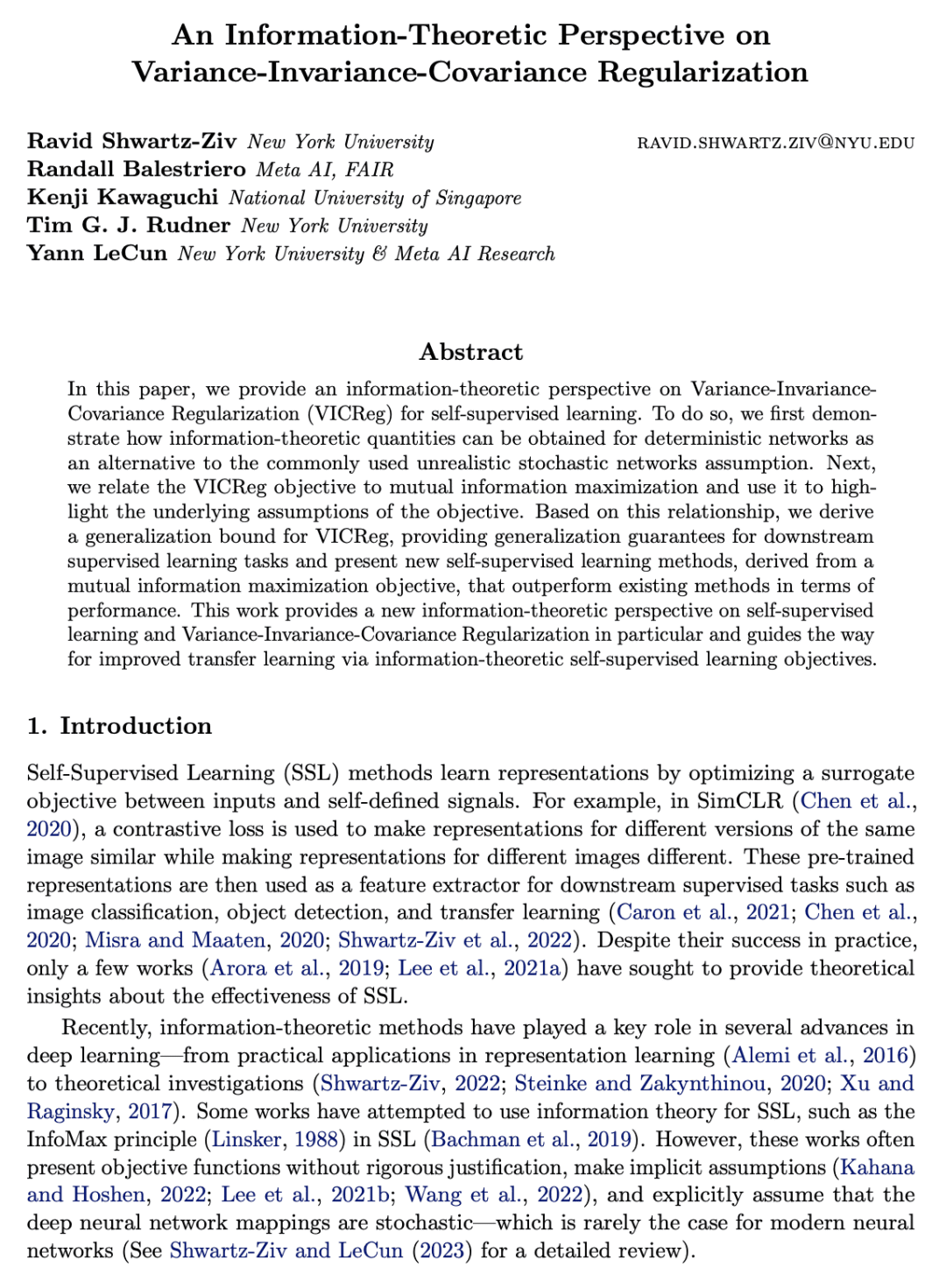

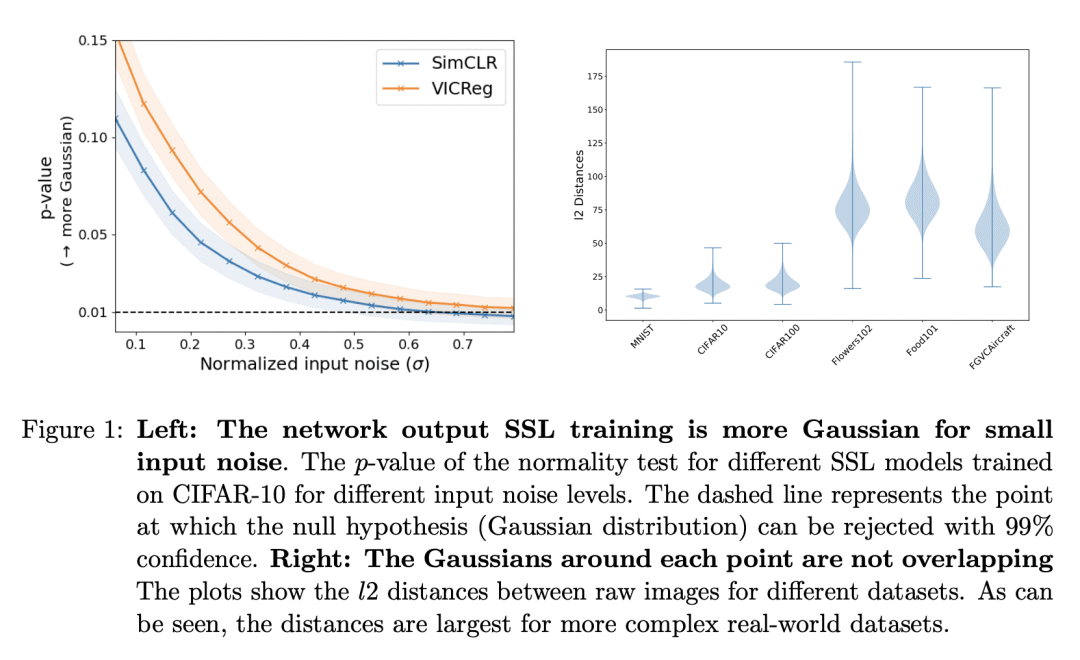

In this paper, we provide an information-theoretic perspective on Variance-Invariance-Covariance Regularization (VICReg) for self-supervised learning. To do so, we first demonstrate how information-theoretic quantities can be obtained for deterministic networks, as an alternative to the common but unrealistic assumption of stochastic networks. Next, we relate the VICReg objective to mutual information maximization and use this relationship to highlight the underlying assumptions of the objective. Based on this relationship, we derive a generalization bound for VICReg, which provides generalization guarantees for downstream supervised learning tasks, and we present new self-supervised learning methods, derived from a mutual information maximization objective, that outperform existing methods. This work provides a new information-theoretic perspective on self-supervised learning, and on Variance-Invariance-Covariance Regularization in particular, and paves the way for improved transfer learning via information-theoretic self-supervised learning objectives.
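Arguments that link covariance-based regularizers to mutual information often use the entropy of a Gaussian, H(Z) = ½ log det(2πe Σ). The sketch below fits a Gaussian to a batch of embeddings and computes that plug-in entropy, together with the gap in Hadamard's inequality, log det Σ ≤ Σ_i log Σ_ii, which is zero exactly when Σ is diagonal. Since log det Σ equals the sum of log-variances minus this gap, raising per-dimension variances and suppressing off-diagonal covariances (what VICReg's variance and covariance terms encourage) tends to raise the estimate. This is an intuition under an explicit Gaussian assumption, not the paper's derivation or its proposed estimator; the function names and the ridge term are hypothetical.

```python
import numpy as np


def sample_covariance(z: np.ndarray, ridge: float = 1e-6) -> np.ndarray:
    """Unbiased covariance of the rows of z (shape (n, d)) plus a small diagonal ridge."""
    n, d = z.shape
    zc = z - z.mean(axis=0)
    return zc.T @ zc / (n - 1) + ridge * np.eye(d)


def gaussian_entropy(z: np.ndarray, ridge: float = 1e-6) -> float:
    """Differential entropy (nats) of a Gaussian with the sample covariance of z."""
    cov = sample_covariance(z, ridge)
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance is not positive definite; increase ridge or batch size")
    d = cov.shape[0]
    return float(0.5 * (d * np.log(2 * np.pi * np.e) + logdet))


def hadamard_gap(z: np.ndarray, ridge: float = 1e-6) -> float:
    """sum_i log cov_ii - log det cov: nonnegative, and zero iff cov is diagonal."""
    cov = sample_covariance(z, ridge)
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance is not positive definite")
    return float(np.sum(np.log(np.diag(cov))) - logdet)
```

For example, with ridge=0, multiplying every embedding by 2 raises the estimate by exactly d·ln 2, because det(4Σ) = 4^d det(Σ).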

Paper link: https://arxiv.org/abs/2303.00633
