StraIT: Non-autoregressive Generation with Stratified Image Transformer

S Qian, H Chang, Y Li, Z Zhang, J Jia, H Zhang

[Google Research]


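Key points:

- StraIT is a stratified modeling framework that encodes visual tokens into stratified levels with emergent properties, easing the modeling difficulty faced by non-autoregressive models;
- On the ImageNet benchmark, StraIT clearly outperforms state-of-the-art autoregressive and diffusion models while achieving 30× faster inference;
- StraIT's decoupled modeling process makes it versatile across applications, including semantic domain transfer;
- Non-autoregressive models such as StraIT are a promising direction for future generative modeling research.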

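One-sentence summary:

StraIT is a purely non-autoregressive (NAR) generative model whose class-conditional image generation outperforms existing autoregressive and diffusion models.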
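The summary stresses that StraIT is purely non-autoregressive, but the post does not describe its decoding loop. What follows is only a generic sketch of confidence-based parallel decoding in the style of MaskGIT. It assumes a cosine masking schedule and greedy (argmax) token selection instead of sampling, and it does not implement StraIT's stratified token pairs. `parallel_decode`, `predict`, `cosine_mask_ratio`, and `MASK` are hypothetical names. The sketch shows where the speed of NAR sampling comes from: a 256-token image is filled in a fixed number of forward passes (8 here), whereas an AR decoder needs one pass per token.

```python
import math
import random
from typing import Callable, List

MASK = -1  # hypothetical sentinel for a position that is not decoded yet


def cosine_mask_ratio(step: int, total_steps: int) -> float:
    """Fraction of positions left masked after `step` of `total_steps` (assumed cosine schedule)."""
    return math.cos(math.pi / 2 * step / total_steps)


def parallel_decode(
    predict: Callable[[List[int]], List[List[float]]],
    num_tokens: int,
    total_steps: int,
) -> List[int]:
    """Fill all positions in `total_steps` forward passes (generic NAR sketch, not StraIT).

    `predict` stands in for the transformer: given the current tokens (with MASK
    entries), it returns a probability distribution over the codebook per position.
    """
    tokens = [MASK] * num_tokens
    for step in range(1, total_steps + 1):
        probs = predict(tokens)  # one forward pass scores every position at once
        # For each masked position, take the argmax token and its confidence.
        candidates = []
        for i, tok in enumerate(tokens):
            if tok == MASK:
                p = probs[i]
                best = max(range(len(p)), key=p.__getitem__)
                candidates.append((p[best], i, best))
        # How many positions should still be masked once this step is done.
        keep_masked = math.floor(num_tokens * cosine_mask_ratio(step, total_steps))
        num_to_fill = len(candidates) - keep_masked
        candidates.sort(reverse=True)  # commit the most confident predictions first
        for _, i, best in candidates[:num_to_fill]:
            tokens[i] = best
    return tokens


# Toy usage: a dummy "model" over a 4-entry codebook that counts its forward passes.
calls = 0


def dummy_predict(tokens: List[int]) -> List[List[float]]:
    global calls
    calls += 1
    rng = random.Random(calls)
    out = []
    for _ in tokens:
        w = [rng.random() for _ in range(4)]
        s = sum(w)
        out.append([x / s for x in w])
    return out


result = parallel_decode(dummy_predict, num_tokens=256, total_steps=8)
print(MASK in result, calls)  # False 8
```

The remaining masked positions are re-predicted at every step, conditioned on the tokens already committed. This lets later steps depend on earlier ones, even though each step predicts many tokens in parallel.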

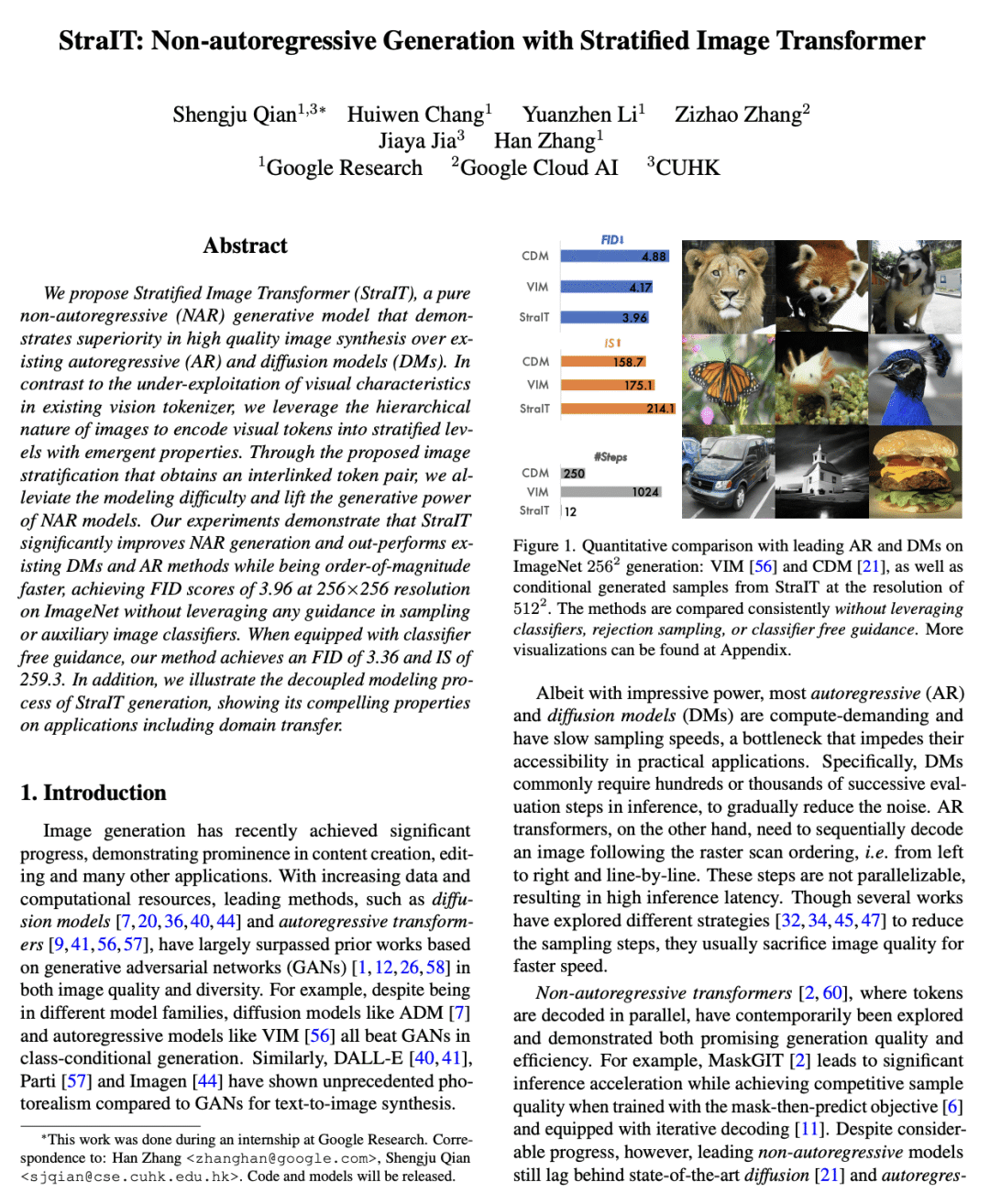

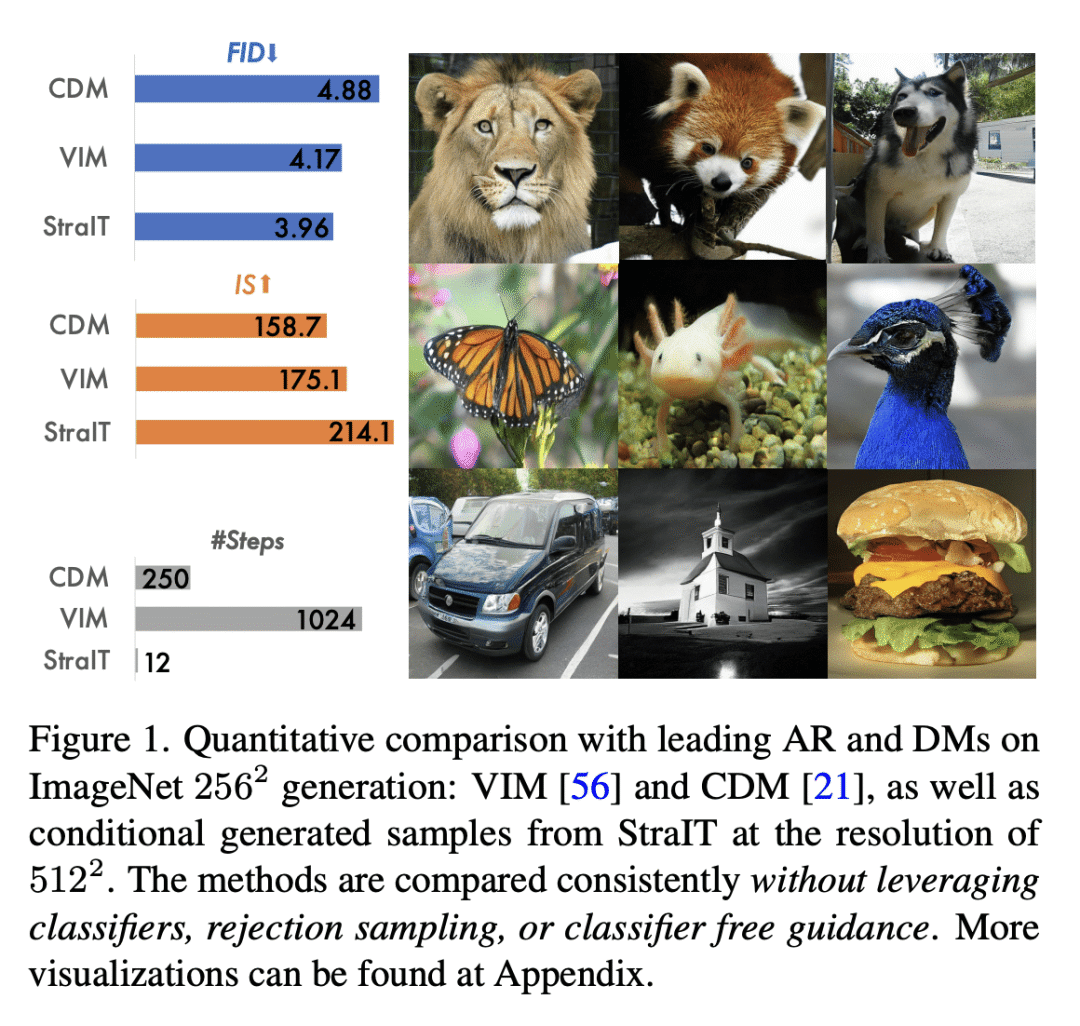

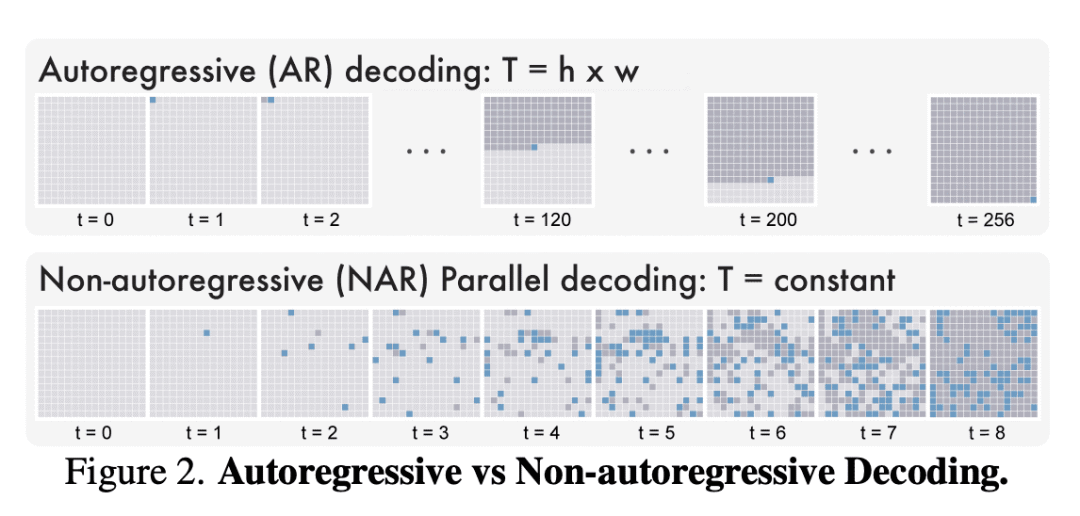

We propose Stratified Image Transformer (StraIT), a pure non-autoregressive (NAR) generative model that demonstrates superiority in high-quality image synthesis over existing autoregressive (AR) and diffusion models (DMs). In contrast to the under-exploitation of visual characteristics in existing vision tokenizers, we leverage the hierarchical nature of images to encode visual tokens into stratified levels with emergent properties. Through the proposed image stratification that obtains an interlinked token pair, we alleviate the modeling difficulty and lift the generative power of NAR models. Our experiments demonstrate that StraIT significantly improves NAR generation and outperforms existing DMs and AR methods while being an order of magnitude faster, achieving an FID score of 3.96 at 256×256 resolution on ImageNet without leveraging any guidance in sampling or auxiliary image classifiers. When equipped with classifier-free guidance, our method achieves an FID of 3.36 and an IS of 259.3. In addition, we illustrate the decoupled modeling process of StraIT generation, showing its compelling properties on applications including domain transfer.
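The abstract reports results both without guidance (FID 3.96) and with classifier-free guidance (FID 3.36). It does not say how guidance is applied inside StraIT. For token-based generators, one common choice is assumed here: run the model once with the class label and once without it, then push the conditional logits away from the unconditional ones. `guided_logits` and `scale` are hypothetical names. The form `cond + scale * (cond - uncond)` is algebraically the same as `(1 + scale) * cond - scale * uncond`, and `scale = 0` recovers plain conditional sampling.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one position's codebook logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def guided_logits(cond: List[float], uncond: List[float], scale: float) -> List[float]:
    """Classifier-free guidance on logits (assumed formulation, not confirmed for StraIT)."""
    return [c + scale * (c - u) for c, u in zip(cond, uncond)]


# Toy usage: the class-conditional pass prefers token 0; the unconditional pass is flat.
cond = [2.0, 1.0, 0.0]
uncond = [1.0, 1.0, 1.0]
guided = guided_logits(cond, uncond, scale=1.0)
print(guided)  # [3.0, 1.0, -1.0]
print(softmax(guided)[0] > softmax(cond)[0])  # True: guidance sharpens toward the class
```

Raising `scale` trades sample diversity for class fidelity. This is the usual reason guided and unguided scores are reported separately.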

Paper: https://arxiv.org/abs/2303.00750

