Understanding plasticity in neural networks

C Lyle, Z Zheng, E Nikishin, B A Pires, R Pascanu, W Dabney

[DeepMind]

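Key points:

- Loss of plasticity during training is deeply connected to changes in the curvature of the loss landscape.
- Plasticity loss typically occurs in the absence of saturated units or divergent gradient norms.
- Parameterization and optimization design choices, such as layer normalization, can better preserve plasticity over the course of training.
- Stabilizing the loss landscape is crucial for promoting plasticity and brings a number of side benefits, such as better generalization.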

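One-sentence summary:

The paper studies the loss of plasticity in deep neural networks over the course of training and identifies stabilizing the loss landscape as important for promoting plasticity.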

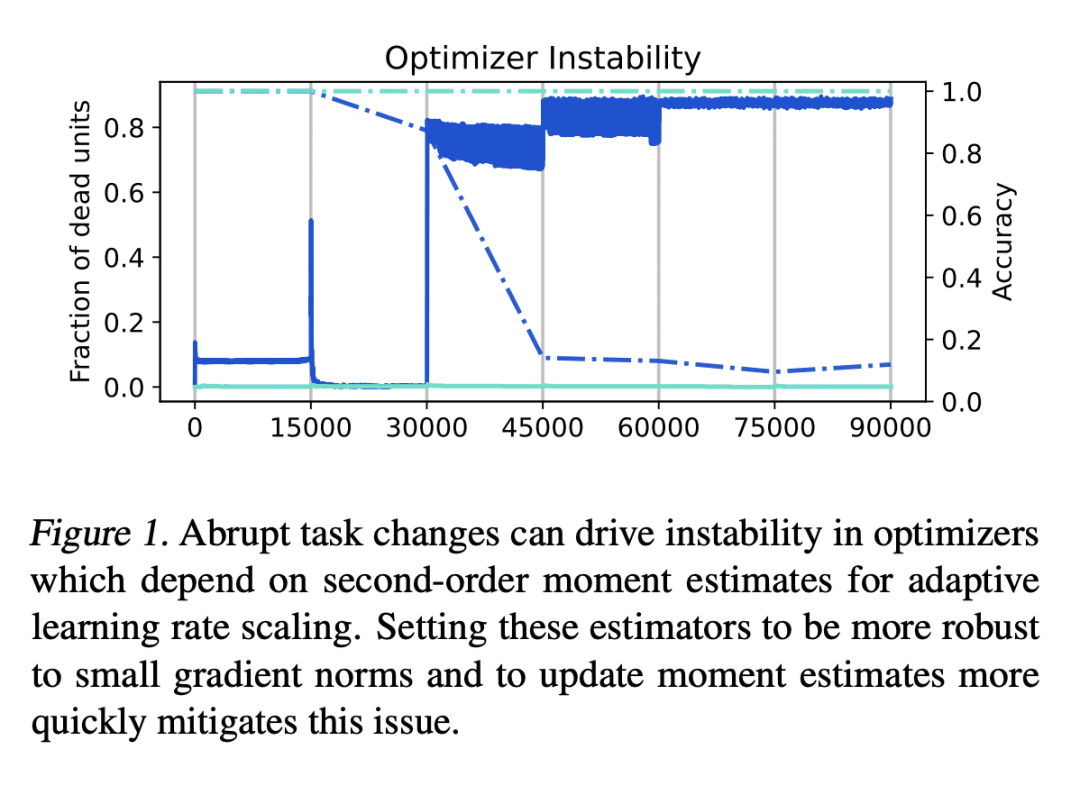

Plasticity, the ability of a neural network to quickly change its predictions in response to new information, is essential for the adaptability and robustness of deep reinforcement learning systems. Deep neural networks are known to lose plasticity over the course of training even in relatively simple learning problems, but the mechanisms driving this phenomenon are still poorly understood. This paper conducts a systematic empirical analysis into plasticity loss, with the goal of understanding the phenomenon mechanistically in order to guide the future development of targeted solutions. We find that loss of plasticity is deeply connected to changes in the curvature of the loss landscape, but that it typically occurs in the absence of saturated units or divergent gradient norms. Based on this insight, we identify a number of parameterization and optimization design choices which enable networks to better preserve plasticity over the course of training. We validate the utility of these findings in larger-scale learning problems by applying the best-performing intervention, layer normalization, to a deep RL agent trained on the Arcade Learning Environment.
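For readers unfamiliar with the intervention named in the abstract, the following is a minimal sketch of what layer normalization computes on a single feature vector, together with a simple check for saturated (dead) ReLU units. It is an illustration only, not the authors' code: the function names `layer_norm` and `dead_relu_fraction`, the `eps` default, and the toy inputs are all assumptions made for this example.

```python
import math


def layer_norm(x, gain=None, bias=None, eps=1e-5):
    """Normalize one feature vector to zero mean and (roughly) unit variance,
    then apply an optional per-feature gain and bias.

    Hypothetical helper for illustration; eps=1e-5 is an assumed default.
    """
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    inv_std = 1.0 / math.sqrt(var + eps)
    gain = gain if gain is not None else [1.0] * n
    bias = bias if bias is not None else [0.0] * n
    return [g * (v - mean) * inv_std + b for v, g, b in zip(x, gain, bias)]


def dead_relu_fraction(pre_activations):
    """Fraction of units whose pre-activation is <= 0 for every input in the
    batch, i.e. ReLU units that output zero on all of the given inputs.

    `pre_activations` is a list of rows, one row per input (assumed layout).
    """
    n_units = len(pre_activations[0])
    dead = sum(
        all(row[j] <= 0.0 for row in pre_activations) for j in range(n_units)
    )
    return dead / n_units


# Toy usage: normalize a hidden vector and inspect a tiny batch of units.
hidden = [1.0, 2.0, 3.0, 4.0]
normalized = layer_norm(hidden)
print(normalized)

batch = [[-1.0, 0.5], [-2.0, -0.1]]
print(dead_relu_fraction(batch))
```

Because the normalized output has the same scale regardless of how large the incoming pre-activations have grown, a layer placed after it sees inputs in a stable range. The second helper reflects the abstract's point that counting saturated units alone may not reveal plasticity loss, since the paper reports that the loss typically occurs even when such units are absent.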

https://arxiv.org/abs/2303.01486
