Introduction

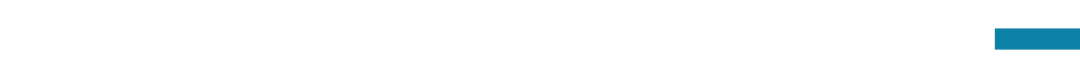

Talk Summary

Talk Outline

1. AI Alignment:

Motivation

Overview of practical methods: SFT, RLHF, DPO

2. SuperAlignment:

Motivation

Research categories: Weak-to-strong generalization, Interpretability, Scalable Oversight, Other directions

3. Scalable Oversight:

Motivation

Related research: implementation methods (agent debate, recursive reward modeling, prover-verifier game), evaluation, and other application scenarios (agent, human annotation)

Main Prerequisites

Alignment

SuperAlignment

About the Speaker

References

[2] Bowman S R, Hyun J, Perez E, et al. Measuring progress on scalable oversight for large language models[J]. arXiv preprint arXiv:2211.03540, 2022.

[3] Irving G, Christiano P, Amodei D. AI safety via debate[J]. arXiv preprint arXiv:1805.00899, 2018.

[4] Leike J, Krueger D, Everitt T, et al. Scalable agent alignment via reward modeling: a research direction[J]. arXiv preprint arXiv:1811.07871, 2018.

[5] Anil C, Zhang G, Wu Y, et al. Learning to give checkable answers with prover-verifier games[J]. arXiv preprint arXiv:2108.12099, 2021.

[6] R. Y. Pang, A. Parrish, N. Joshi, et al. QuALITY: Question Answering with Long Input Texts, Yes!. North American Chapter of the Association for Computational Linguistics, 2022: 5336-5358

[7] S. Chern, E. Chern, G. Neubig, et al. Can Large Language Models be Trusted for Evaluation? Scalable Meta-Evaluation of LLMs as Evaluators via Agent Debate. arXiv.org, 2024, arXiv:2401.16788

[8] Collin Burns, Pavel Izmailov, Jan Hendrik Kirchner, et al. Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. arXiv:2312.09390, 2023

[9] Long Ouyang, Jeff Wu, Xu Jiang, et al. Training language models to follow instructions with human feedback. arXiv:2203.02155, 2022

Live Stream Information

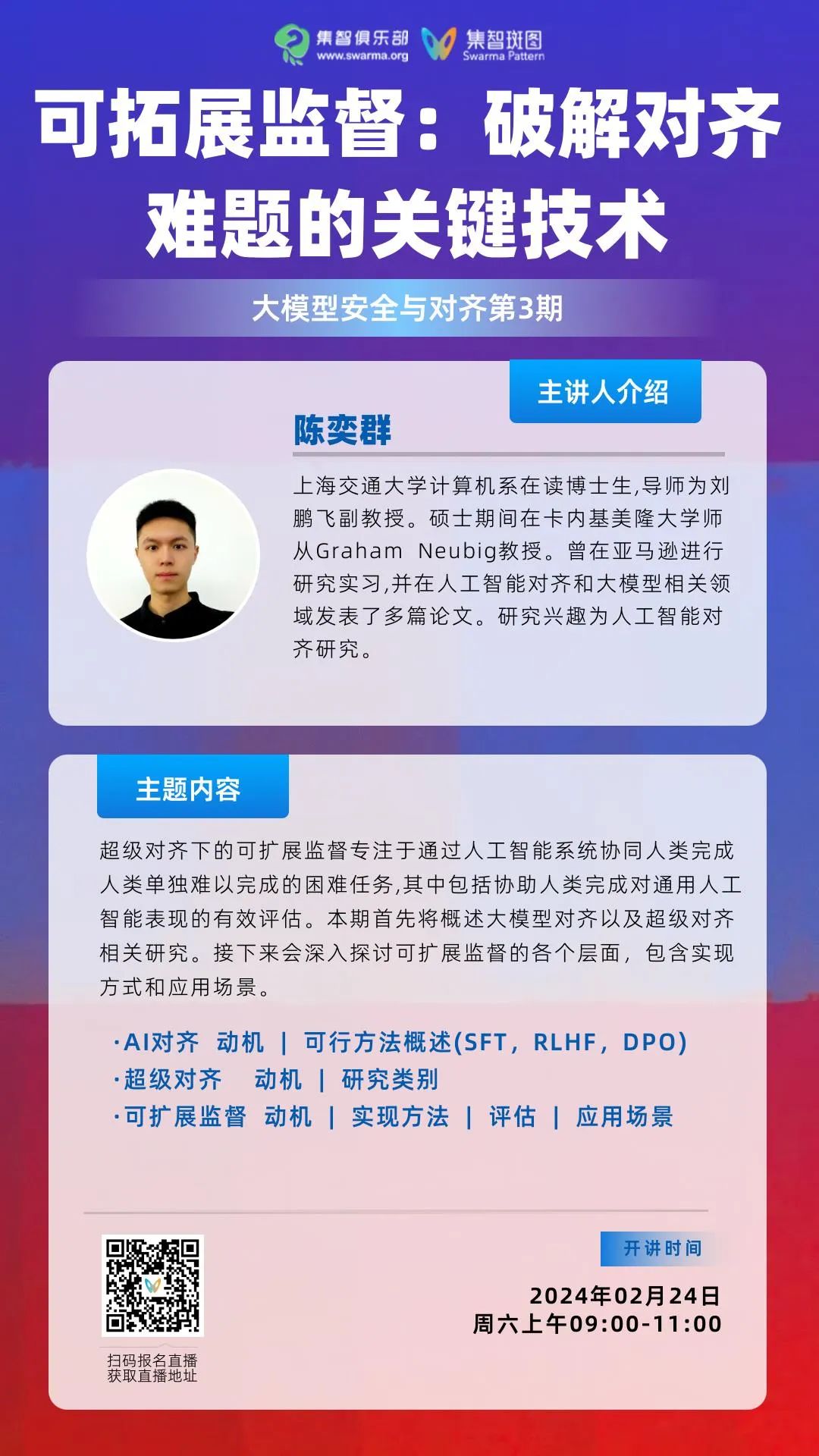

Large Model Safety and Alignment Reading Group

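The rapid advance of large models has awakened enthusiasm and high hopes for AI technology. It has also raised widespread concern about the social and ethical risks inherent in the technology and the potential threat it poses to human survival. Against this backdrop, AI safety and alignment has drawn broad attention as a research direction dedicated to making AI benefit humanity and to preventing catastrophic outcomes caused by AI models going out of control or being misused. Swarma Club (集智俱乐部) and Concordia AI (安远AI) are jointly hosting the "Large Model Safety and Alignment" reading group. Initiated by a group of front-line researchers in China and abroad, it aims to explore in depth the cross-cutting topics of large model safety and alignment, including core techniques, theoretical frameworks, solution paths, and safety governance.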

Click "Read the original" to sign up for the reading group.
