Reported by 新智元

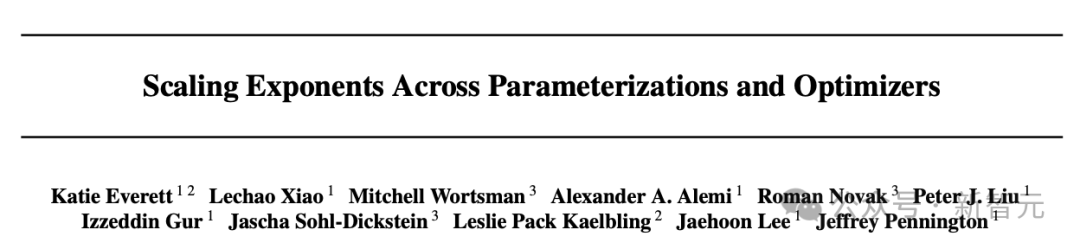

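[新智元 Editor's note] A DeepMind paper recently accepted at ICML 2024 lays bare how lavishly the lab can spend with Google behind it. A blog post estimated the compute and cost this research required: roughly 15% of Llama 3 pretraining, with a bill of up to US$12.9M.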

Paper: https://arxiv.org/abs/2407.05872

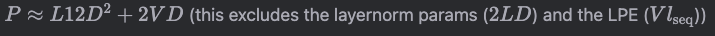

Transformer architecture details

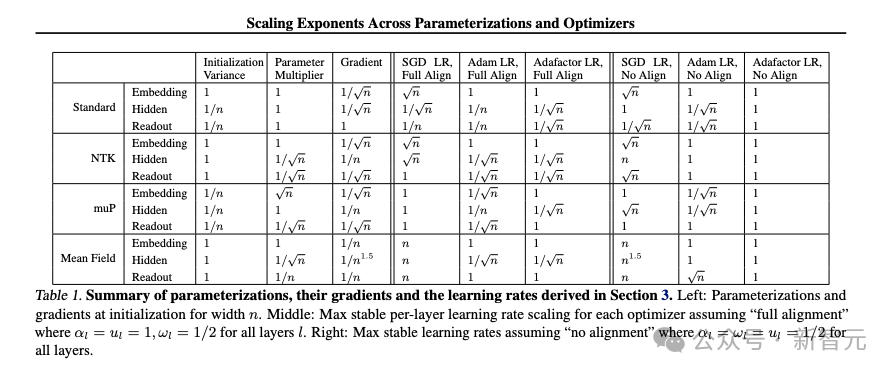

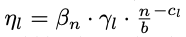

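Appendix C of the paper lists the algorithmic and architectural settings in detail: a decoder-only architecture, layer normalization, GeLU activations, no dropout, the T5 tokenizer, a batch size of 256, FSDP parallelism, and so on.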

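    # Estimated training FLOPs per token for a model of width d
    # (L layers, sequence length l_seq, vocabulary size V).
    def M(d: int, L=8, l_seq=512, V=32101) -> int:
        return 6*d * (L*(12*d + l_seq) + V)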

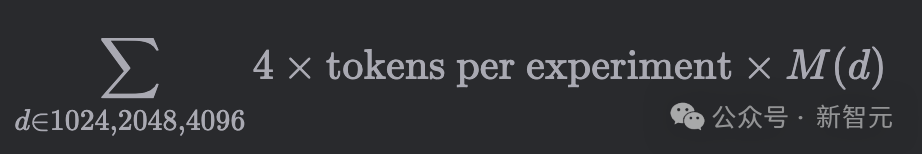

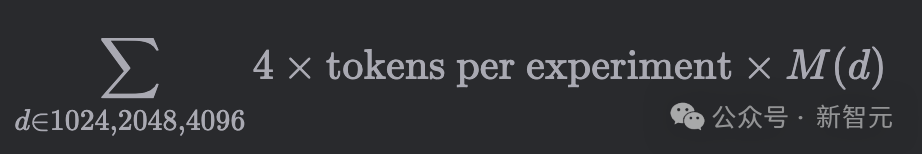

    # Tokens per experiment (presumably steps * batch size * sequence length)
    TPE = 50000 * 256 * 512

Alignment experiments

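Assume the alignment experiments directly reused the best result from the later learning-rate sweeps, with no separate sweep of their own. The cost of this step is then simple to compute: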

    def alignment() -> int:
        return 4 * TPE * sum(M(d) for d in [1024, 2048, 4096])

    # >>> f'{alignment():.3E}'
    # '3.733E+20'
    # >>> cost_of_run(alignment())[0]
    # 888.81395400704
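The article calls `cost_of_run` but never shows its definition. The following is a minimal sketch of what it might look like, not the original author's code. It assumes a sustained 3.5e14 FLOP/s per H100 and 8 GPUs per node; both constants are inferred from the printed results rather than stated anywhere in the article. Only the US$3 per GPU-hour price is quoted in the text.

```python
# Assumed constants, inferred from the article's printed results.
H100_FLOPS = 3.5e14      # sustained FLOP/s per H100 (assumption)
GPUS_PER_NODE = 8        # GPUs per node (assumption)
USD_PER_GPU_HOUR = 3.0   # rental price quoted in the article

def cost_of_run(flops, rate=USD_PER_GPU_HOUR):
    """Return (rental cost in USD, H100 node-hours) for a FLOP budget."""
    gpu_hours = flops / H100_FLOPS / 3600
    return gpu_hours * rate, gpu_hours / GPUS_PER_NODE

def M(d, L=8, l_seq=512, V=32101):
    # FLOPs-per-token estimate, copied from the article
    return 6*d * (L*(12*d + l_seq) + V)

TPE = 50000 * 256 * 512  # tokens per experiment, copied from the article

def alignment():
    return 4 * TPE * sum(M(d) for d in [1024, 2048, 4096])

usd, node_hours = cost_of_run(alignment())
print(f"{alignment():.3E} FLOPs, ${usd:,.2f}, {node_hours:.2f} node-hours")
```

Under these assumptions both elements of the returned tuple agree with the figures printed for every experiment in the article, which suggests the second element is a node-hour count.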

At US$3 per H100-hour, the alignment experiments cost roughly US$888.

Learning rate


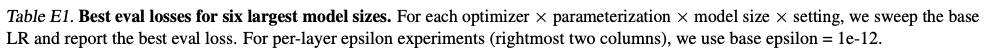

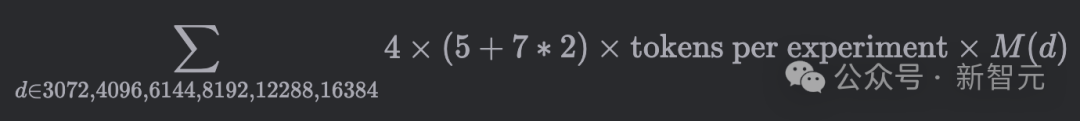

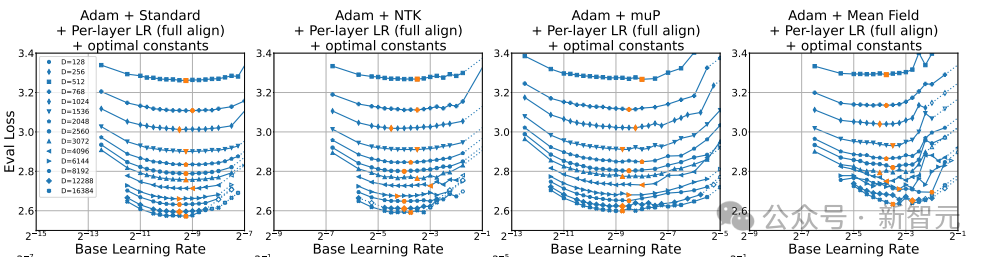

Sub-problem: best eval loss experiments

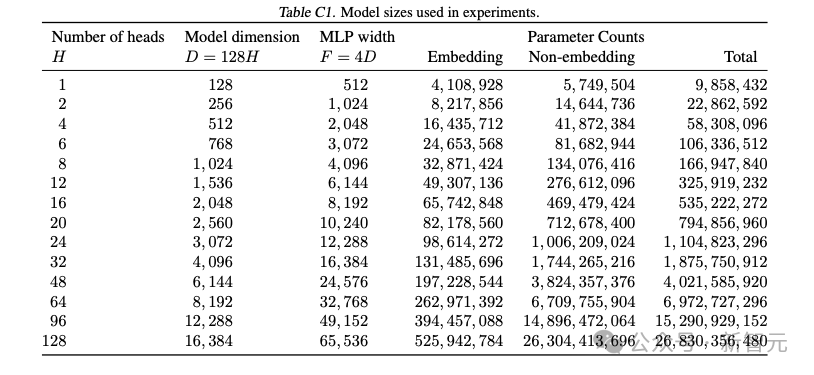

    H = [1,2,4,6,8,12,16,20,24,32,48,64,96,128]
    D = [h * 128 for h in H]

    def table_e1() -> int:
        sets_x_optims = 5 + 7 + 7
        return 4 * sets_x_optims * TPE * sum(M(d) for d in D[-6:])

    # >>> f'{table_e1():.3E}';cost_of_run(table_e1())
    # '1.634E+23'
    # (388955.9991064986, 16206.499962770775)

β parameter

def beta_only() -> int:

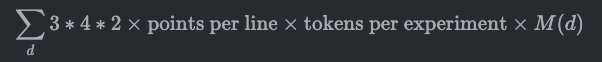

return 3*4*2*PpL * TPE * sum(M(d) for d in D)

# 7.988E+23 (1902022.3291813303, 79250.93038255542)γ参数

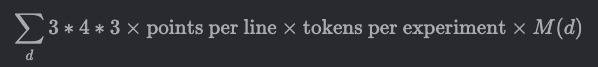

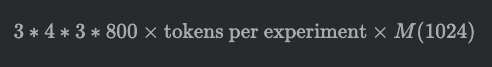

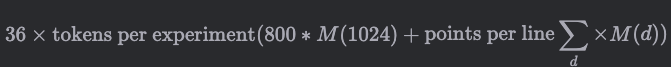

def gamma_expts() -> int:

return 36*TPE * (800*M(1024) + PpL*sum(M(d) for d in D))

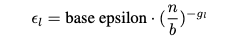

# gamma_expts 1.354E+24 (3224397.534237257, 134349.8972598857)Adam优化器的Epsilon参数

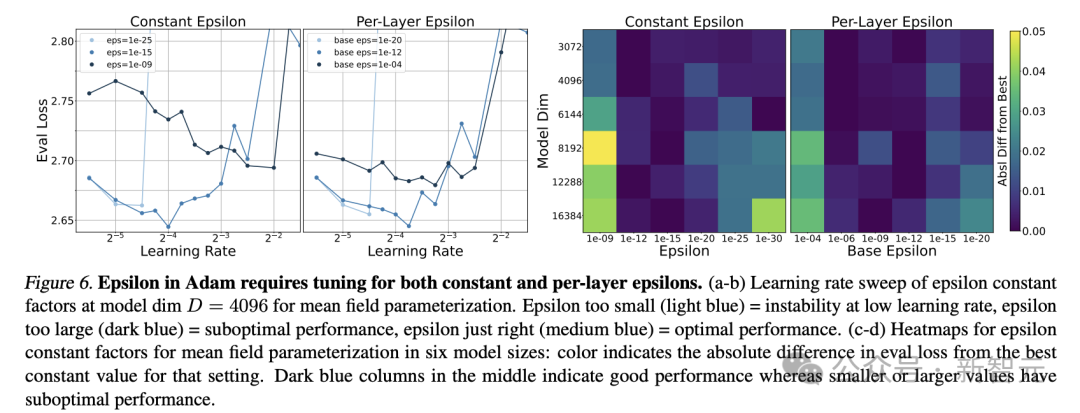

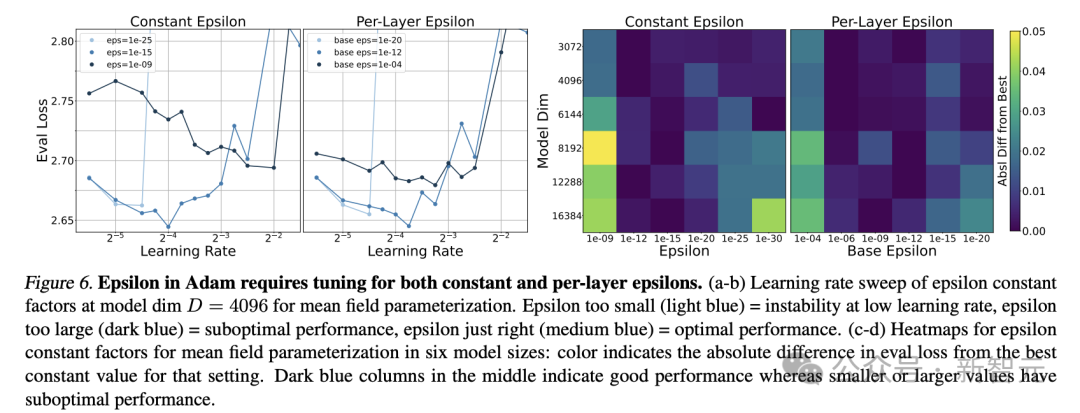

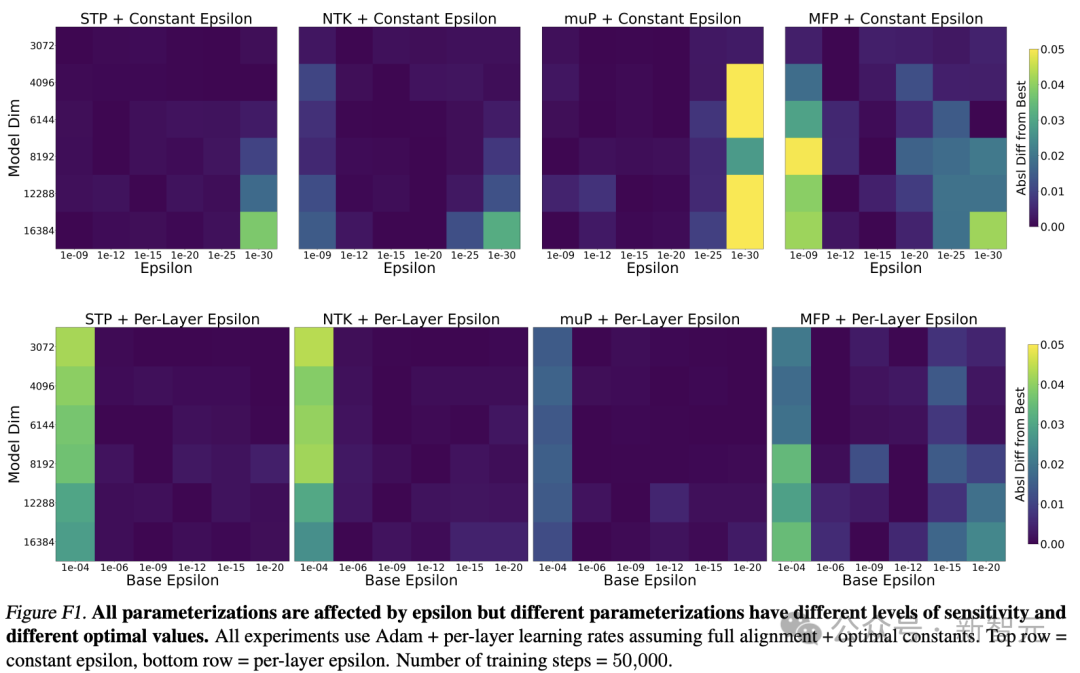

The epsilon experiments described in Section 4.3 of the paper account for the bulk of the compute.

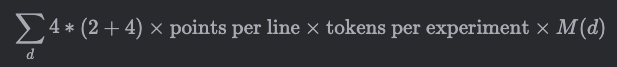

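Following the earlier inference that every best-eval-loss point was found by trying 15 different learning rates (points per line), the compute behind the epsilon sweep plotted in Figure 6 is:

    PpL = 15 # unprincipled estimate

    def eps_variants() -> int:
        return 4 * 6 * PpL * TPE * sum(M(d) for d in D)

    '''
    >>> f'{eps_variants():.3E}';cost_of_run(eps_variants())
    '7.988E+23'
    (1902022.3291813303, 79250.93038255542)
    '''

The result is expensive in a refreshingly simple way: a bill of roughly US$1.9 million, or about 2 million in round numbers.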

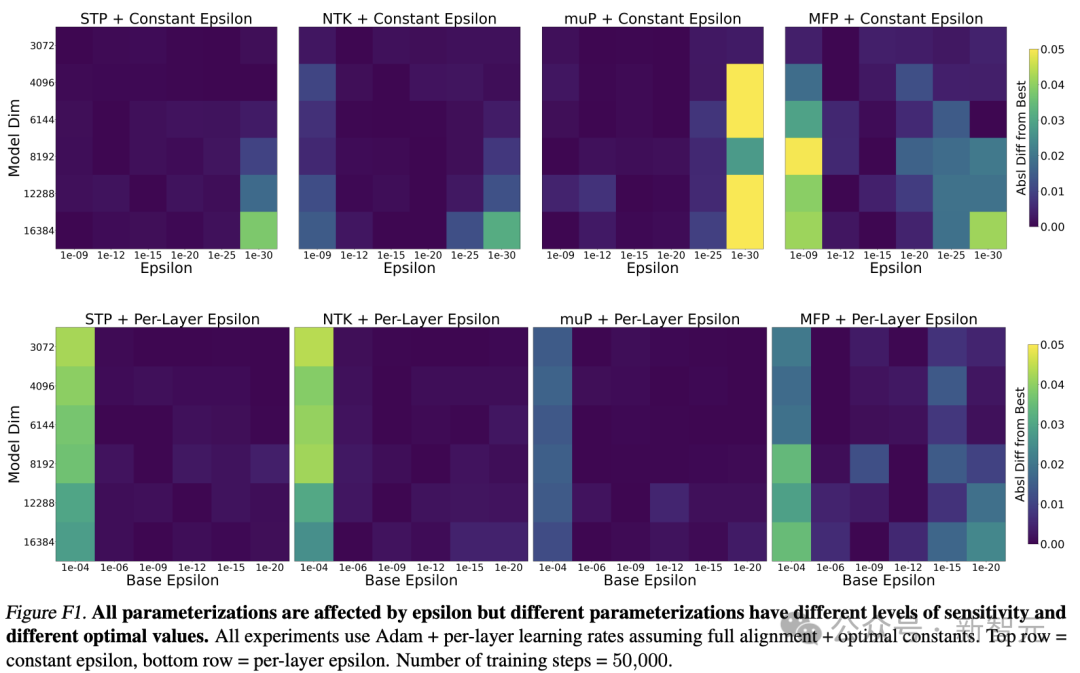

Besides the line plots on the left of Figure 6, there are also the heatmaps in Appendix F.

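Assuming every cell in the heatmaps is the result of a 13-point learning-rate sweep, the compute for this part is:

    def eps_heatmaps() -> int:
        # eps-type * eps-val * parameterizations * LR range * ...
        return 2 * 6 * 4 * 13 * TPE * sum(M(d) for d in D[-6:])

    '''
    >>> f'{eps_heatmaps():.3E}';cost_of_run(eps_heatmaps())
    '1.341E+24'
    (3193533.466348094, 133063.89443117057)
    '''

Producing these 8 heatmaps alone costs about US$3.2 million. Because the number of LR sweep points is modeled as a constant 13, this figure may well underestimate the real cost.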

Weight decay


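The weight decay experiments (Appendix G) are easy to follow: one basic LR sweep for each of the 4 parameterizations, across all model sizes (every width in D):

    def weight_decay() -> int:
        return 4 * PpL * TPE * sum(M(d) for d in D)

    '''
    >>> f'{weight_decay():.3E}'; cost_of_run(weight_decay())
    '1.331E+23'
    (317003.7215302217, 13208.488397092571)
    '''

This is far cheaper than the epsilon experiments: US$317K, about one year's salary for a Bay Area engineer.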

Adafactor optimizer


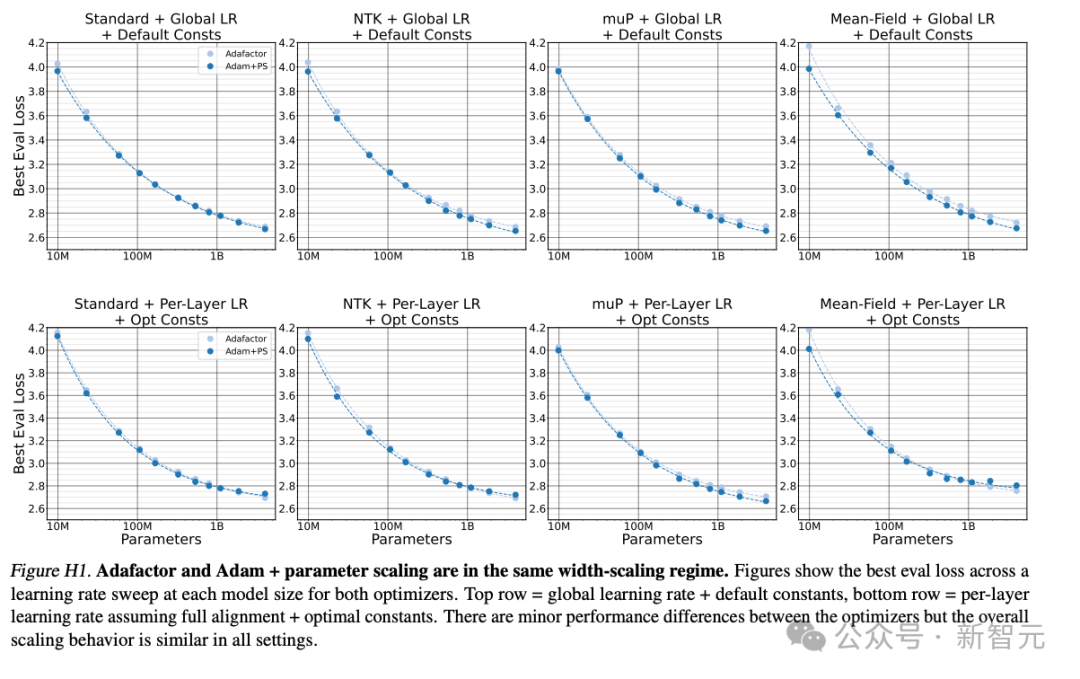

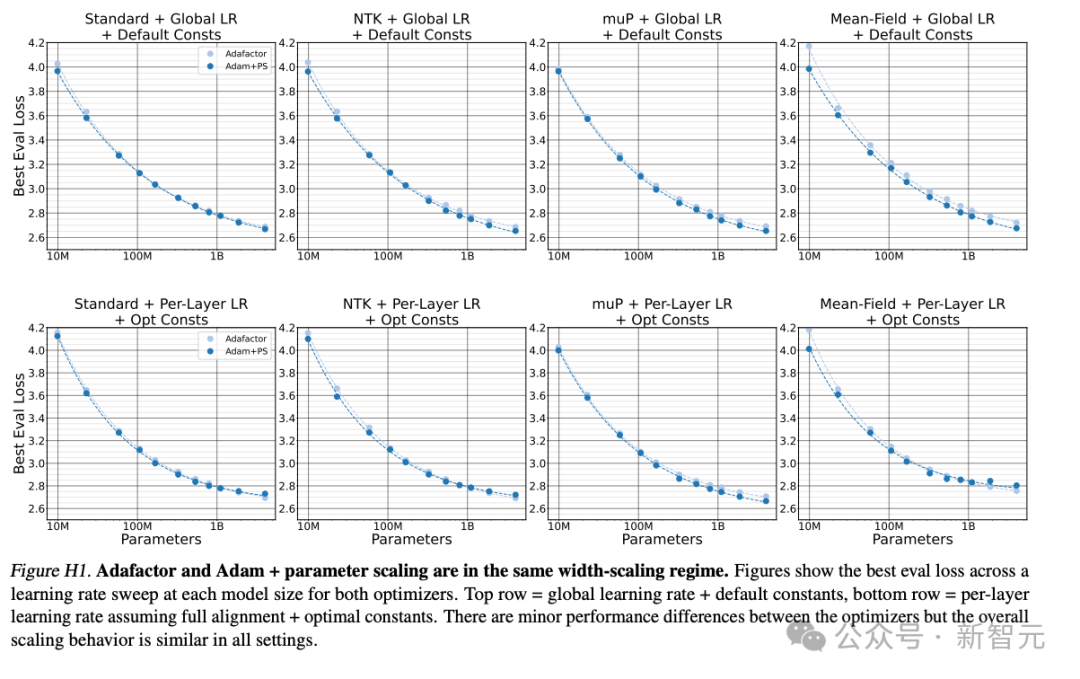

These experiments are described in detail in Appendix C3. They test whether Adafactor and Adam + parameter scaling show similar width-scaling behavior.

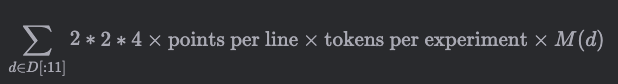

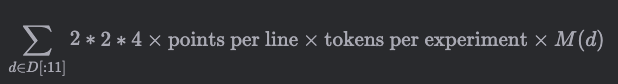

There are 2×4 plots, with 11 data points collected for each optimizer, so the formula is:

    def adafactor() -> int:
        return 2*2*4*PpL*TPE*sum(M(d) for d in D[:11])

    '''
    >>> f'{adafactor():.3E}'; cost_of_run(adafactor())
    '7.918E+22'
    (188532.80765144504, 7855.533652143543)
    '''

That adds another US$188K to the bill.

Compute-optimal experiments


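The paper varies the number of attention heads H in search of a compute-optimal setting. These runs also change the number of training steps and the dataset, so this part is not expressed as a single formula. The calculation code is:

    # Parameter count of a width-d model
    def P(d: int, L=8, V=32101) -> int:
        return 2 * d * (6*L*d + V)

    def compute_optimal():
        indices_50k = (14, 14, 12)
        return 4*PpL*sum([
            TPE * sum(sum( M(d) for d in D[:i] ) for i in indices_50k),
            20 * sum(P(d)*M(d) for d in D[:11]) *3,
        ])

    # compute_optim 7.518E+23 (1790104.1799513847, 74587.67416464102)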

Summary


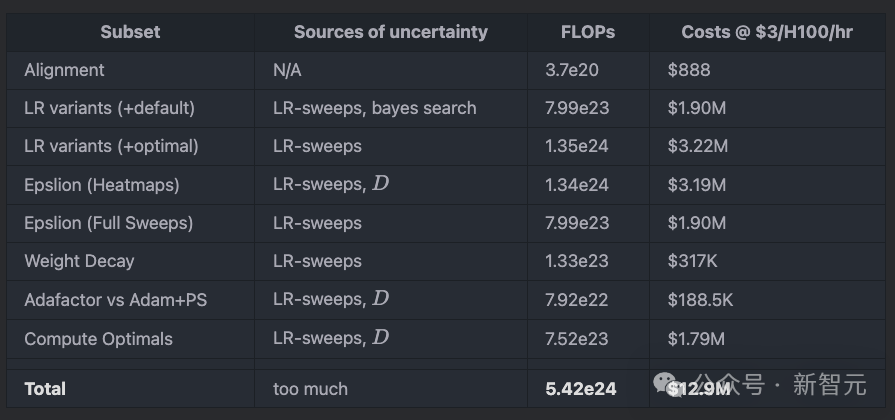

Putting together the compute and cost of all the experiments above (each row shows the experiment name, its FLOPs, and the tuple returned by cost_of_run, whose first element is the cost in US dollars):

    alignment 3.733E+20 (888.81395400704, 37.033914750293334)
    table_e1 1.634E+23 (388955.9991064986, 16206.499962770775)
    eps_variants 7.988E+23 (1902022.3291813303, 79250.93038255542)
    eps_heatmaps 1.341E+24 (3193533.466348094, 133063.89443117057)
    beta_only 7.988E+23 (1902022.3291813303, 79250.93038255542)
    gamma_expts 1.354E+24 (3224397.534237257, 134349.8972598857)
    weight_decay 1.331E+23 (317003.7215302217, 13208.488397092571)
    adafactor 7.918E+22 (188532.80765144504, 7855.533652143543)
    compute_optim 7.518E+23 (1790104.1799513847, 74587.67416464102)
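The per-experiment rows can be added up to check the headline totals. The sketch below copies the FLOPs and dollar figures from the rows (dollars rounded to cents) and derives node-months from the dollar total. It assumes US$3 per GPU-hour as quoted in the article, plus two values that are inferred rather than stated: 8 GPUs per node and a 30-day month.

```python
# (FLOPs, cost in USD) per experiment, copied from the article's summary rows.
experiments = {
    "alignment":     (3.733e20,     888.81),
    "table_e1":      (1.634e23,  388956.00),
    "eps_variants":  (7.988e23, 1902022.33),
    "eps_heatmaps":  (1.341e24, 3193533.47),
    "beta_only":     (7.988e23, 1902022.33),
    "gamma_expts":   (1.354e24, 3224397.53),
    "weight_decay":  (1.331e23,  317003.72),
    "adafactor":     (7.918e22,  188532.81),
    "compute_optim": (7.518e23, 1790104.18),
}

USD_PER_GPU_HOUR = 3.0    # quoted in the article
GPUS_PER_NODE = 8         # assumption
HOURS_PER_MONTH = 30 * 24 # assumption: 30-day month

total_flops = sum(f for f, _ in experiments.values())
total_usd = sum(c for _, c in experiments.values())
node_months = total_usd / USD_PER_GPU_HOUR / GPUS_PER_NODE / HOURS_PER_MONTH

print(f"total FLOPs ~ {total_flops:.2e}")
print(f"rental price ~ US${total_usd / 1e6:.1f}M")
print(f"H100 node-months ~ {node_months:.1f}")
```

Because the FLOPs column is rounded to four significant digits, the summed total can differ from the article's 5.421E+24 in the last digit.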

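The whole paper comes to about 5.42e24 FLOPs. That is only about 15% of the compute used to train Llama 3. On a cluster of 100,000 H100s, all of the experiments could be completed in just 2 days.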

    total_flops=5.421E+24
    rental price: US$12.9M
    h100 node months required: 746.9595590938408
    (sanity check) D=[128, 256, 512, 768, 1024, 1536, 2048, 2560, 3072, 4096, 6144, 8192, 12288, 16384]
    (sanity check) model sizes: ['0.00979B', '0.0227B', '0.058B', '0.106B', '0.166B', '0.325B', '0.534B', '0.794B', '1.1B', '1.87B', '4.02B', '6.97B', '15.3B', '26.8B']
    (sanity check) M/6P: ['63.4%', '68.5%', '75.3%', '79.7%', '82.8%', '86.8%', '89.3%', '91.0%', '92.2%', '93.9%', '95.7%', '96.7%', '97.7%', '98.3%']
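The sanity-check lines follow directly from the `H`, `D`, `P` and `M` definitions given in the article. The sketch below recomputes them. The format specifiers (`.3g` and `.1%`) are a guess that happens to match the printed strings, not something the article shows.

```python
H = [1, 2, 4, 6, 8, 12, 16, 20, 24, 32, 48, 64, 96, 128]
D = [h * 128 for h in H]  # model widths

def P(d, L=8, V=32101):
    # parameter count, as defined in the article
    return 2 * d * (6*L*d + V)

def M(d, L=8, l_seq=512, V=32101):
    # FLOPs per token, as defined in the article
    return 6*d * (L*(12*d + l_seq) + V)

# Model sizes in billions of parameters
sizes = [f"{P(d) / 1e9:.3g}B" for d in D]
# Ratio of the article's FLOPs estimate to the 6*P rule of thumb
ratios = [f"{M(d) / (6 * P(d)):.1%}" for d in D]

print(D)
print(sizes)
print(ratios)
```

The ratio approaches 100% as width grows, so for the largest models `M` is close to the 6 FLOPs per parameter per token rule of thumb.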

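However, judged as a piece of academic research rather than by the standards of LLM pretraining, this compute budget looks quite extravagant. A lab with only 10 H100s could not attempt research at this scale at all. A large lab with 100 H100s might be able to finish all of the experiments above in a few years.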
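The cluster-size comparison is simple arithmetic on the total FLOP count. The sketch below is a rough check, not a scheduling model. It assumes a sustained 3.5e14 FLOP/s per H100 (a value inferred from the article's cost figures, not stated in it) and perfectly linear scaling with GPU count, which real clusters will not achieve.

```python
TOTAL_FLOPS = 5.421e24  # total from the article
H100_FLOPS = 3.5e14     # assumed sustained FLOP/s per H100

def days_to_finish(n_gpus: int) -> float:
    """Wall-clock days to run all experiments on n_gpus H100s (ideal scaling)."""
    seconds = TOTAL_FLOPS / (H100_FLOPS * n_gpus)
    return seconds / 86400

for n in (10, 100, 100_000):
    d = days_to_finish(n)
    print(f"{n:>7} H100s: {d:,.1f} days ({d / 365:.1f} years)")
```

Under these assumptions, 100,000 GPUs need a little under 2 days, 100 GPUs need about 5 years, and 10 GPUs need about 50 years, consistent with the article's claims.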

References:

https://152334h.github.io/blog/scaling-exponents/
