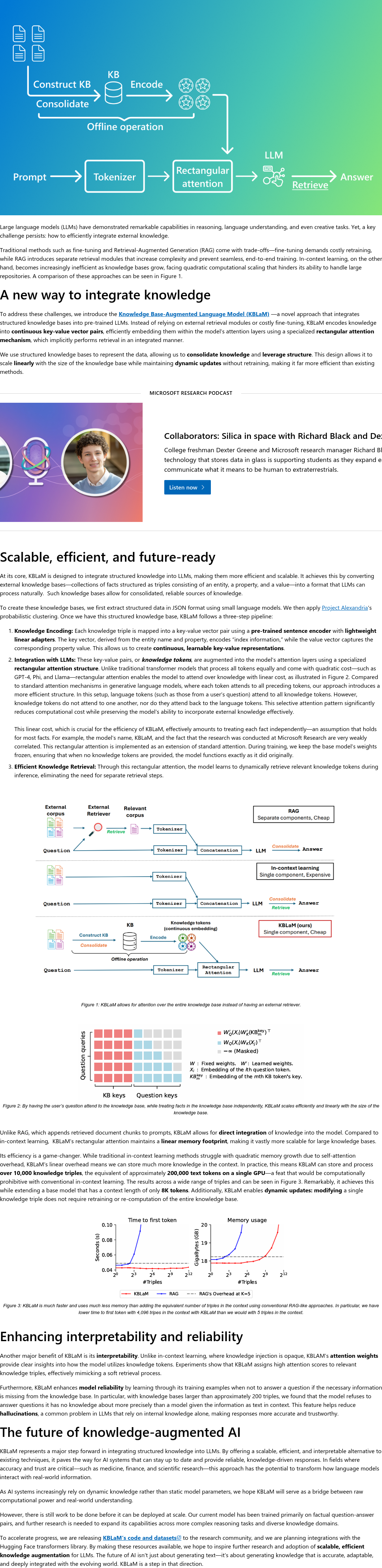

Large language models (LLMs) excel at reasoning, language understanding, and creative tasks, but they struggle to integrate external knowledge efficiently. Traditional methods like fine-tuning require expensive retraining, while Retrieval-Augmented Generation (RAG) adds a separate retrieval module that complicates the pipeline and disrupts end-to-end training. In-context learning becomes inefficient for large knowledge bases because the cost of self-attention grows quadratically with context length. The Knowledge Base-Augmented Language Model (KBLaM) is introduced to overcome these limitations. KBLaM integrates structured knowledge bases into pre-trained LLMs without relying on external retrieval modules or extensive fine-tuning. The approach aims to streamline knowledge integration and reduce computational overhead while maintaining the model's performance and adaptability, offering a more scalable way to incorporate external knowledge into LLMs.
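To make the quadratic-scaling point concrete, here is a minimal sketch that assumes standard full self-attention, where every token in the context attends to every other token. The function name `attention_score_entries` and the token counts are illustrative assumptions, not figures from the KBLaM work, and the count ignores layers, heads, and any caching tricks.

```python
def attention_score_entries(prompt_tokens: int, kb_tokens: int) -> int:
    """Count query-key score entries for one attention head in one layer
    when the knowledge base is pasted into the context as plain text.

    Assumes full (non-sparse) self-attention over all n tokens,
    which produces an n x n score matrix.
    """
    n = prompt_tokens + kb_tokens
    return n * n


if __name__ == "__main__":
    prompt_tokens = 100  # hypothetical question length
    for kb_tokens in (1_000, 10_000, 100_000):  # hypothetical KB sizes
        entries = attention_score_entries(prompt_tokens, kb_tokens)
        print(f"KB tokens: {kb_tokens:>7}  score entries: {entries:,}")
```

Under these assumptions, doubling the amount of knowledge placed in the context roughly quadruples the attention work, which is the inefficiency that the in-context approach runs into as the knowledge base grows.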
