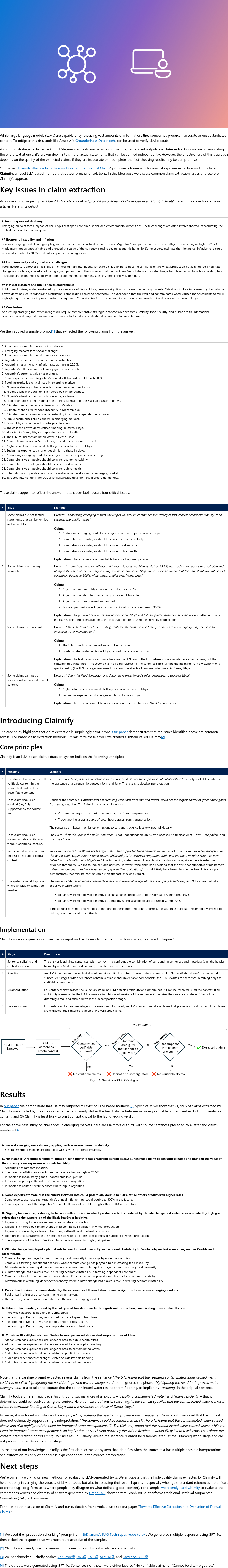

Large language models (LLMs) can generate large amounts of information, but they sometimes produce inaccurate content. Tools such as Azure AI’s Groundedness Detection help verify LLM outputs. A key fact-checking strategy is claim extraction: breaking complex text down into simple factual statements that can each be verified independently. The quality of the extracted claims strongly affects how well fact-checking works, because inaccurate or incomplete claims can compromise the results.

The paper "Towards Effective Extraction and Evaluation of Factual Claims" presents a framework for evaluating claim extraction methods and introduces Claimify, an LLM-based approach that outperforms previous solutions. This blog post examines common issues in claim extraction and explores ways to make it more accurate and reliable, so that fact-checking of LLM-generated content becomes more effective.
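To make the "extract, then verify each claim independently" idea concrete, here is a minimal toy sketch. It is not Claimify and not Azure AI's Groundedness Detection: the function names (`extract_claims`, `is_supported`, `fact_check`) are hypothetical, sentence splitting stands in for an LLM-based extractor, and a simple word-overlap test stands in for a real groundedness check.

```python
import re

# Hypothetical toy stopword list; a real verifier would not rely on word overlap.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "and", "to", "on"}


def extract_claims(text: str) -> list[str]:
    """Toy claim extractor: treat each sentence as one claim.

    Stand-in for an LLM-based extractor such as the one the paper describes.
    """
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p.rstrip(".!?") for p in parts if p]


def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def is_supported(claim: str, source: str) -> bool:
    """Toy verifier: a claim counts as grounded if all its content words occur in the source."""
    return content_words(claim) <= content_words(source)


def fact_check(answer: str, source: str) -> dict[str, bool]:
    """Verify every extracted claim on its own and return one verdict per claim."""
    return {claim: is_supported(claim, source) for claim in extract_claims(answer)}


source = "The Eiffel Tower is in Paris. It was completed in 1889."
answer = "The Eiffel Tower is in Paris. It was completed in 1901."
print(fact_check(answer, source))
# {'The Eiffel Tower is in Paris': True, 'It was completed in 1901': False}
```

Because each claim gets its own verdict, the wrong date is isolated instead of casting doubt on the whole answer. The second claim also shows why claim quality matters: "It was completed in 1901" cannot be checked on its own without knowing what "It" refers to, so a better extractor would produce a self-contained claim.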
