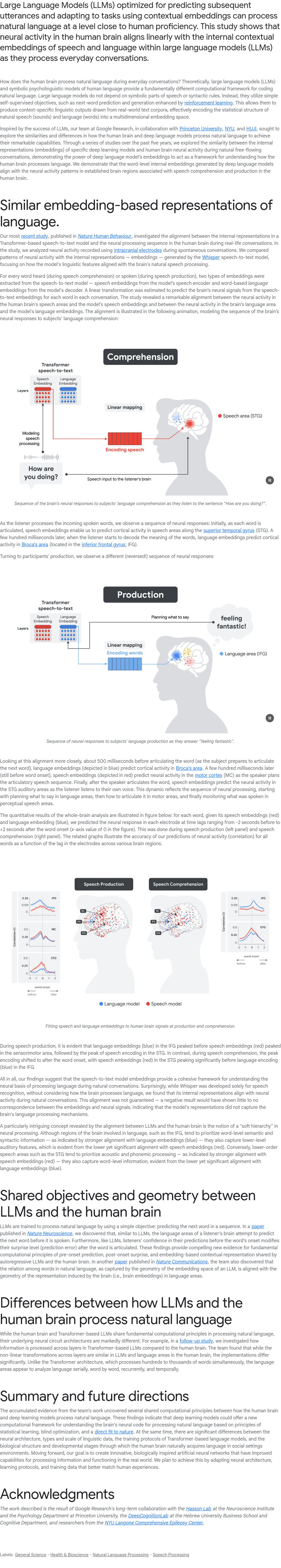

Large language models (LLMs) are optimized to predict upcoming text and to adapt to tasks through contextual embeddings, and they now approach human-level proficiency in natural language processing. This study shows that, during everyday conversations, neural activity in the human brain is linearly aligned with the internal contextual embeddings of LLMs. LLMs and symbolic psycholinguistic models offer different computational frameworks for modeling natural language: unlike symbolic models, LLMs do not rely on explicit parts of speech or syntactic rules. Instead, they learn from self-supervised objectives such as next-word prediction, with generation further refined by reinforcement learning. Trained on real-world text corpora, LLMs produce context-specific linguistic output that mimics human language use. By bridging artificial and human language processing, this research offers insight into how the brain handles natural language in daily conversation.
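The abstract does not say how "linear alignment" is measured. A common approach in this line of research is a linear encoding model: fit a regularized linear map from each word's contextual embedding to the neural response recorded around that word, then score how well it predicts held-out words. The sketch below assumes ridge regression, a single train/test split, and per-electrode Pearson correlation as the score. The function names (`fit_ridge`, `encoding_model_scores`) are hypothetical, and the data are synthetic stand-ins, not recordings or embeddings from the study.

```python
import numpy as np


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^{-1} X^T Y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)


def columnwise_pearson(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / np.sqrt((A**2).sum(axis=0) * (B**2).sum(axis=0))


def encoding_model_scores(embeddings, neural, alpha=1.0, train_frac=0.8):
    """Fit a linear map from word embeddings (words x dims) to neural
    responses (words x electrodes) on the first part of the data, then
    return one held-out correlation per electrode."""
    n_train = int(train_frac * embeddings.shape[0])
    X_train, X_test = embeddings[:n_train], embeddings[n_train:]
    Y_train, Y_test = neural[:n_train], neural[n_train:]
    # Standardize with training statistics only, so no test information leaks in.
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0) + 1e-8
    X_train = (X_train - mu) / sd
    X_test = (X_test - mu) / sd
    # Center the targets instead of fitting an explicit intercept.
    y_mu = Y_train.mean(axis=0)
    W = fit_ridge(X_train, Y_train - y_mu, alpha)
    Y_pred = X_test @ W + y_mu
    return columnwise_pearson(Y_pred, Y_test)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_words, emb_dim, n_electrodes = 2000, 50, 8
    # Hypothetical stand-ins: random "contextual embeddings", and neural
    # signals generated as a noisy linear function of them.
    X = rng.standard_normal((n_words, emb_dim))
    true_W = rng.standard_normal((emb_dim, n_electrodes))
    Y = X @ true_W + 5.0 * rng.standard_normal((n_words, n_electrodes))
    print(np.round(encoding_model_scores(X, Y, alpha=10.0), 2))
```

Scoring on held-out words is the important design choice here: with 50 predictors, an in-sample fit would report inflated correlations even for electrodes whose activity is pure noise.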
