1. [RO] Isaac Gym: High Performance GPU-Based Physics Simulation For Robot Learning

V Makoviychuk, L Wawrzyniak, Y Guo, M Lu, K Storey, M Macklin, D Hoeller, N Rudin, A Allshire, A Handa, G State

[NVIDIA]

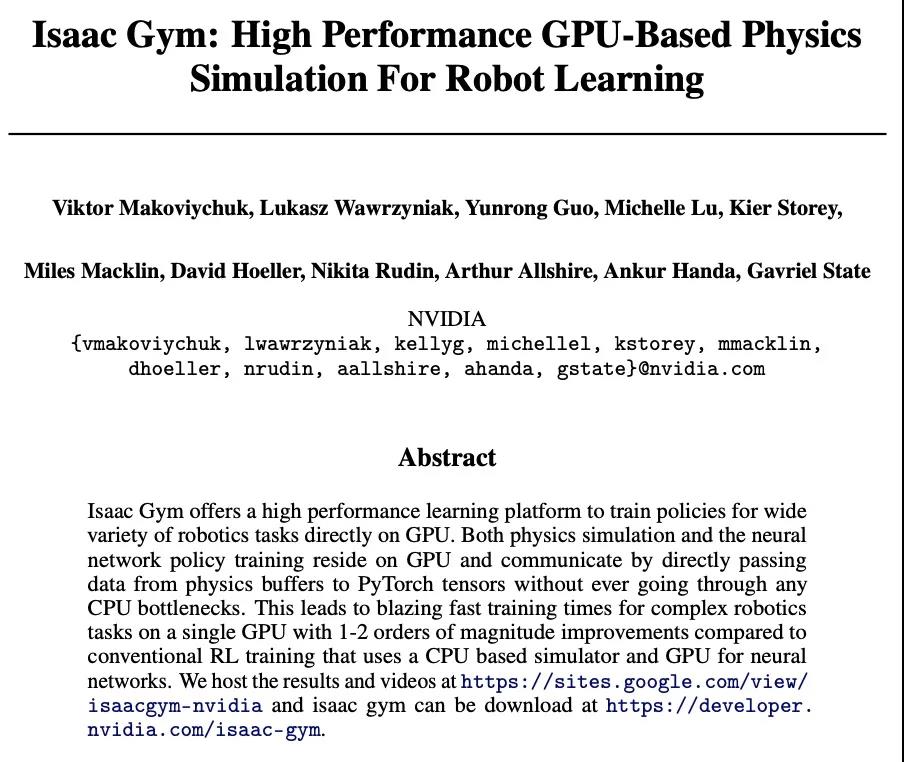

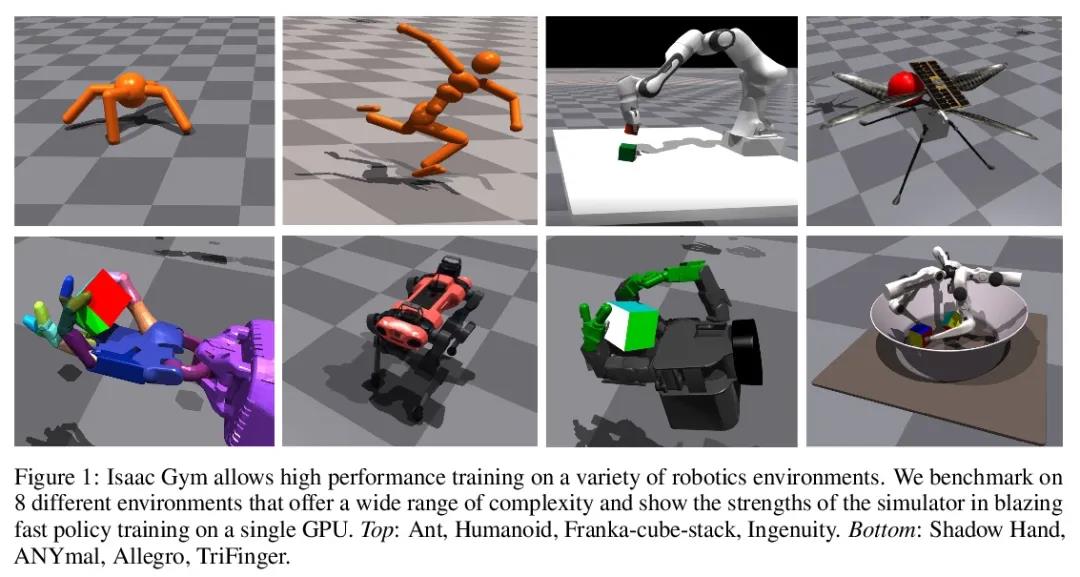

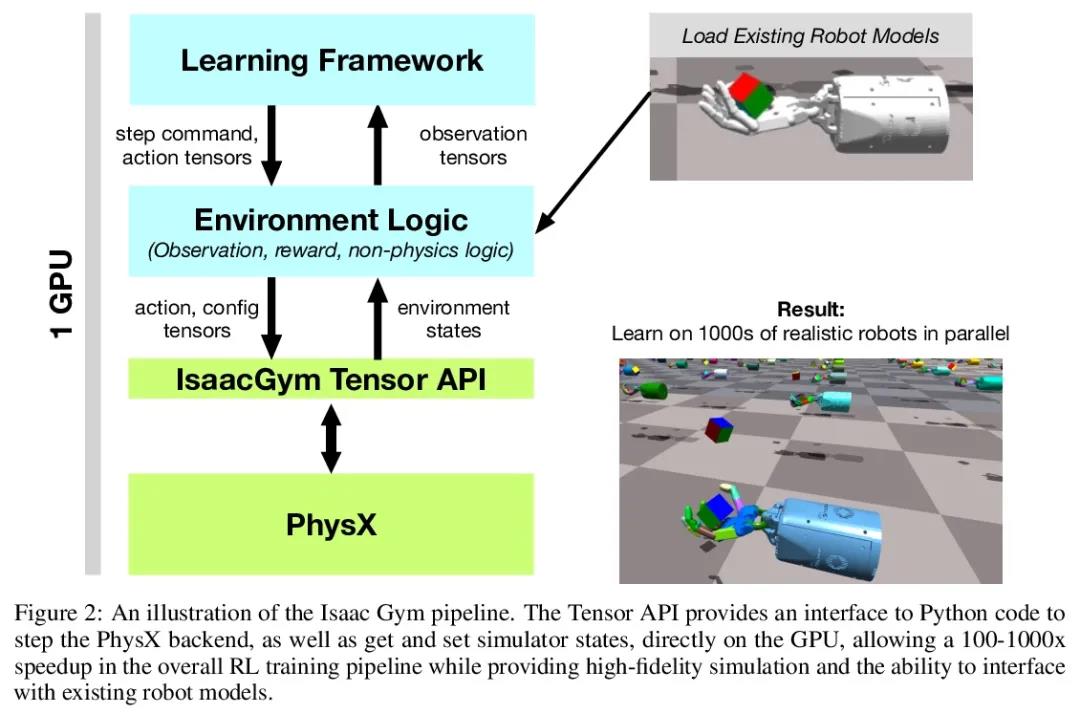

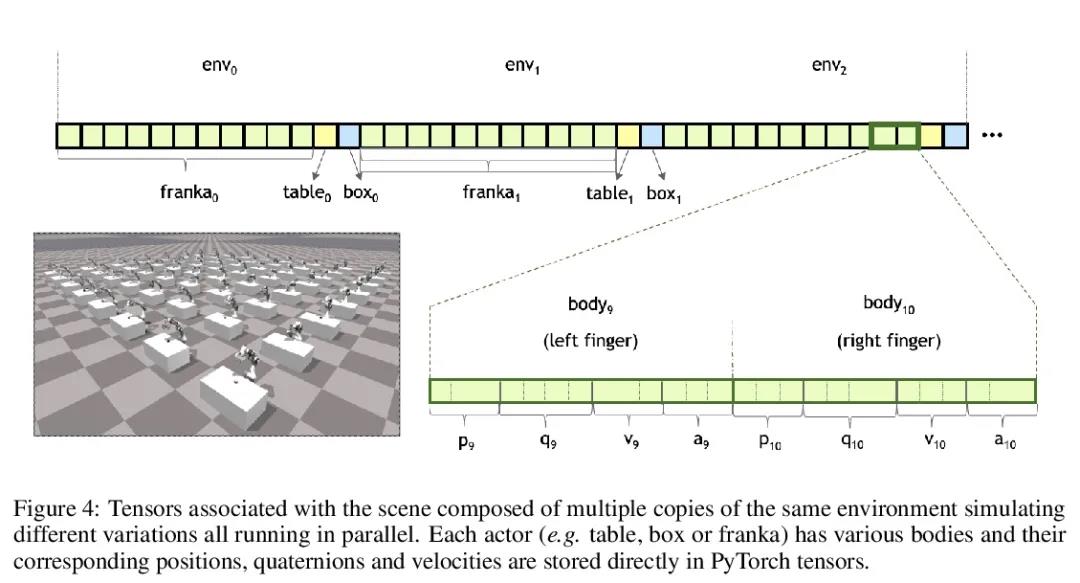

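Isaac Gym: high-performance GPU-based physics simulation for robot learning. Isaac Gym is a high-performance learning platform for training policies for a wide variety of robotics tasks directly on the GPU. Both physics simulation and neural-network policy training run on the GPU and communicate by passing data directly from physics buffers to PyTorch tensors, never going through a CPU bottleneck. As a result, complex robotics tasks train remarkably fast on a single GPU, with 1-2 orders of magnitude improvement over conventional RL training that pairs a CPU-based simulator with a GPU for the neural networks.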

Isaac Gym offers a high performance learning platform to train policies for a wide variety of robotics tasks directly on the GPU. Both physics simulation and the neural network policy training reside on the GPU and communicate by directly passing data from physics buffers to PyTorch tensors without ever going through any CPU bottlenecks. This leads to blazing fast training times for complex robotics tasks on a single GPU with 1-2 orders of magnitude improvements compared to conventional RL training that uses a CPU based simulator and GPU for neural networks.

https://weibo.com/1402400261/Kvbeo0PIc

2. [LG] Do Transformer Modifications Transfer Across Implementations and Applications?

S Narang, H W Chung, Y Tay, W Fedus, T Févry, M Matena, K Malkan, N Fiedel, N Shazeer, Z Lan, Y Zhou, W Li, N Ding, J Marcus, A Roberts, C Raffel

[Google Research]

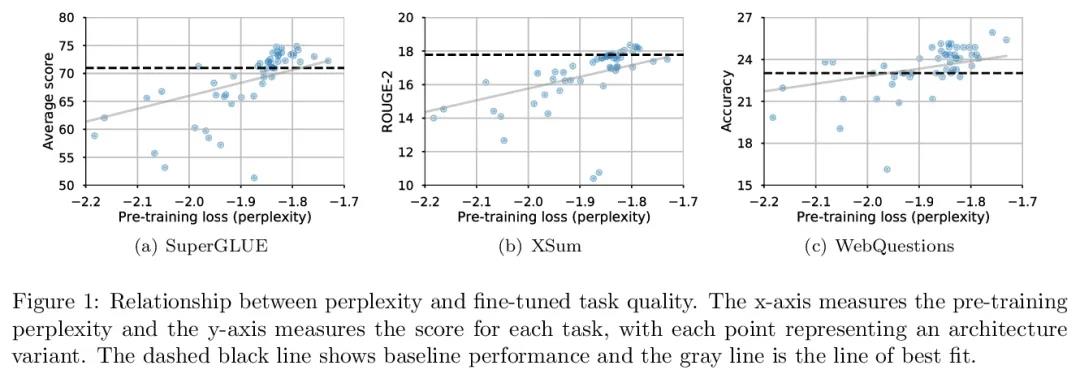

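Do Transformer modifications transfer across implementations and applications? Since the Transformer architecture was introduced three years ago, the research community has proposed a large number of modifications, but relatively few have been widely adopted. This paper comprehensively evaluates these modifications in a shared experimental setting that covers most common uses of the Transformer in natural language processing. Surprisingly, most modifications do not meaningfully improve performance. Most of the variants that did help were either developed in the same codebase the authors used or are relatively minor changes. The authors conjecture that performance gains may depend heavily on implementation details, and accordingly make recommendations for improving the generality of experimental results.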

The research community has proposed copious modifications to the Transformer architecture since it was introduced over three years ago, relatively few of which have seen widespread adoption. In this paper, we comprehensively evaluate many of these modifications in a shared experimental setting that covers most of the common uses of the Transformer in natural language processing. Surprisingly, we find that most modifications do not meaningfully improve performance. Furthermore, most of the Transformer variants we found beneficial were either developed in the same codebase that we used or are relatively minor changes. We conjecture that performance improvements may strongly depend on implementation details and correspondingly make some recommendations for improving the generality of experimental results.

https://weibo.com/1402400261/KvbiV0viK

3. [CV] DROID-SLAM: Deep Visual SLAM for Monocular, Stereo, and RGB-D Cameras

Z Teed, J Deng

[Princeton University]

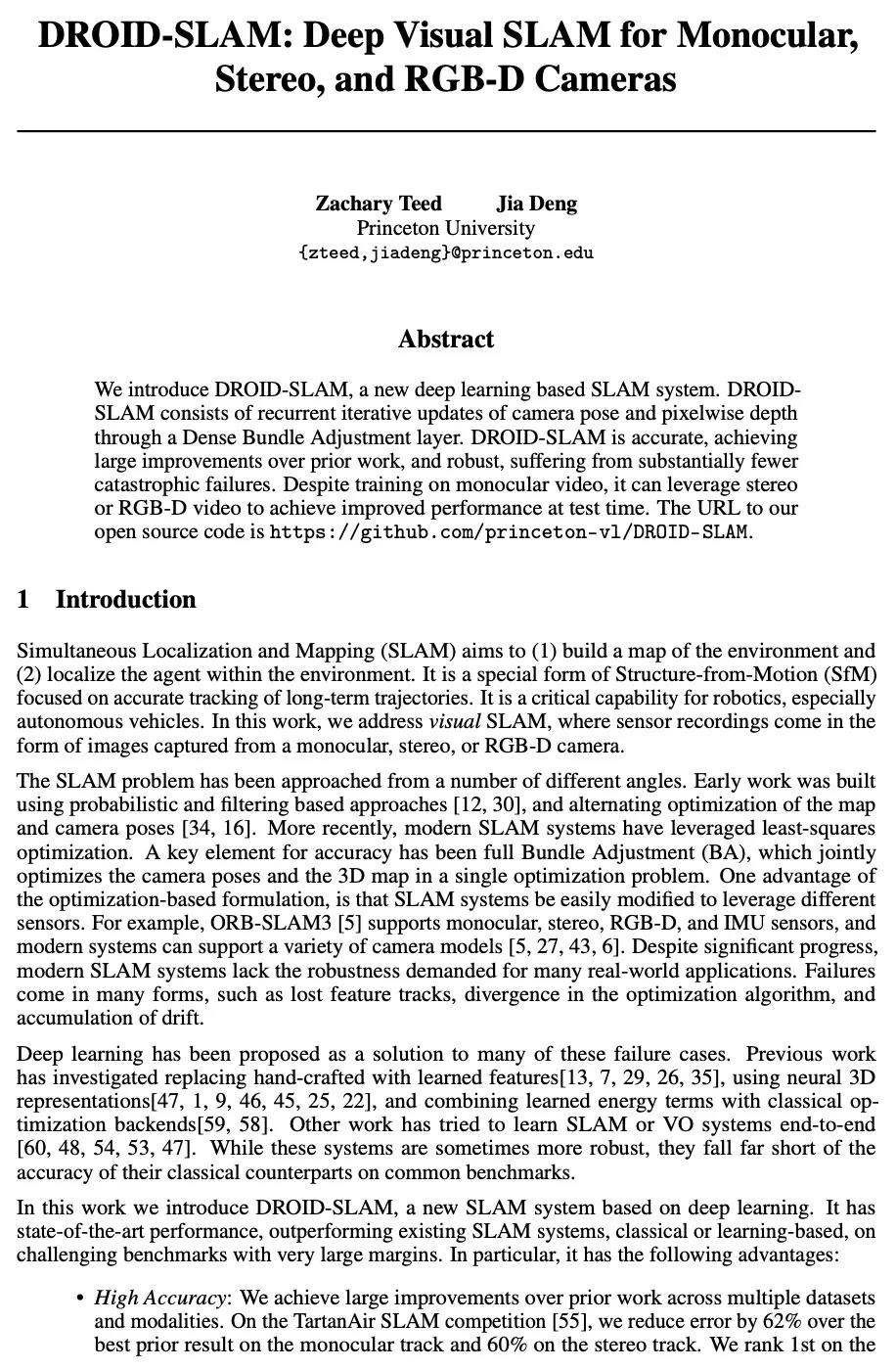

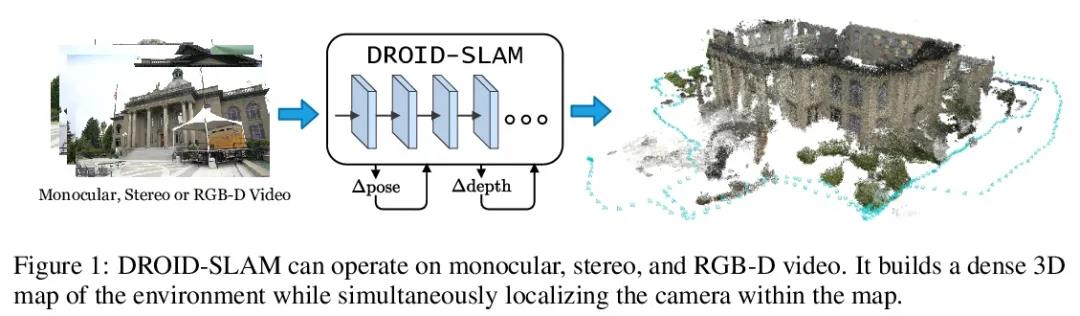

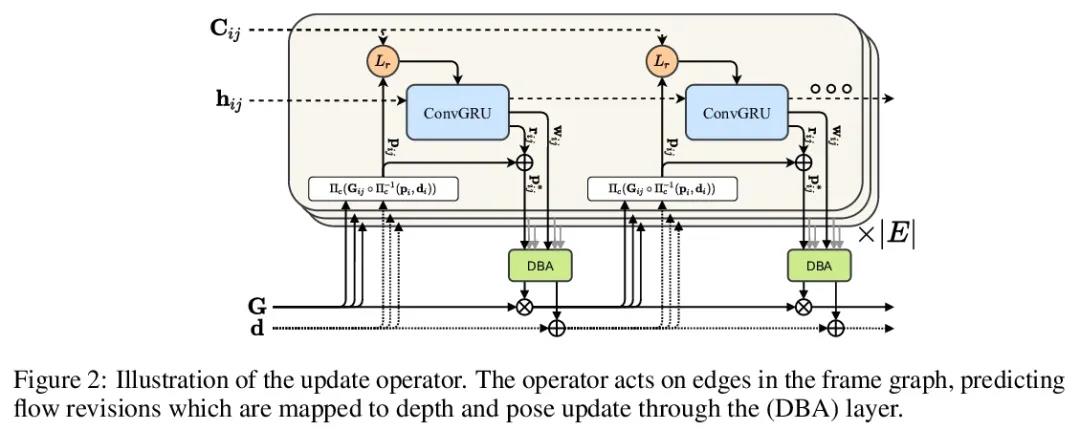

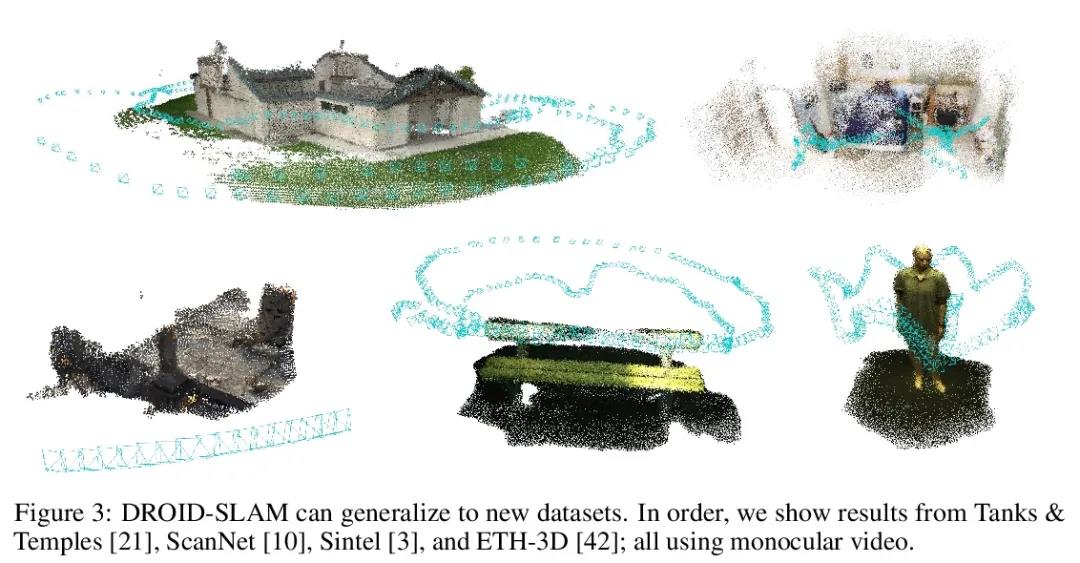

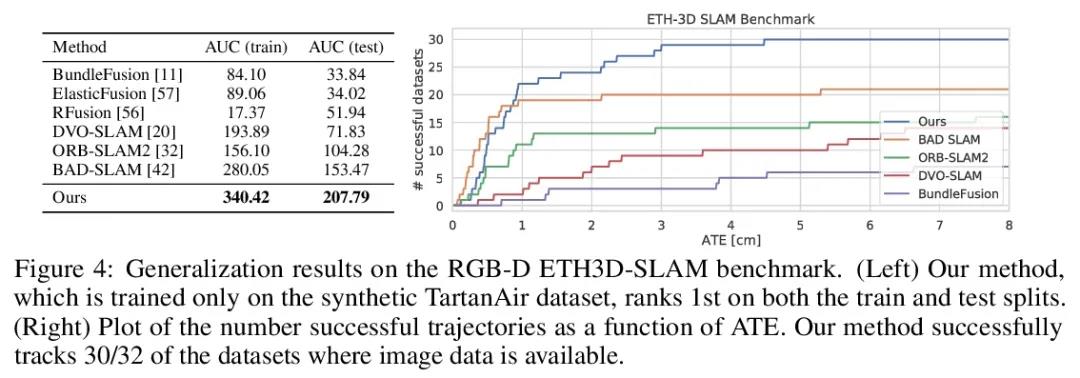

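DROID-SLAM: deep visual SLAM for monocular, stereo, and RGB-D cameras. This paper presents DROID-SLAM, a new deep-learning-based SLAM system. DROID-SLAM performs recurrent iterative updates of camera poses and per-pixel depth through a Dense Bundle Adjustment layer. It is accurate, improving substantially over prior work, and robust, with far fewer catastrophic failures. Although it is trained on monocular video, it can exploit stereo or RGB-D video at test time to improve performance. On challenging benchmarks it outperforms prior work by a large margin.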

We introduce DROID-SLAM, a new deep learning based SLAM system. DROID-SLAM consists of recurrent iterative updates of camera pose and pixelwise depth through a Dense Bundle Adjustment layer. DROID-SLAM is accurate, achieving large improvements over prior work, and robust, suffering from substantially fewer catastrophic failures. Despite training on monocular video, it can leverage stereo or RGB-D video to achieve improved performance at test time.
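The core idea of jointly refining camera poses and (inverse) depths by repeated least-squares updates can be illustrated with a toy Gauss-Newton bundle adjustment. This is a minimal sketch under heavily simplified assumptions, not DROID-SLAM's Dense Bundle Adjustment layer: a 1D image plane with unit focal length, cameras that differ only by a sideways translation `t`, points parameterized by their pixel `u0` and inverse depth `rho` in a reference frame, and no learned components (the real layer works on full 3D camera poses and is driven by network outputs). All function names are hypothetical.

```python
from typing import List


def project(u0: float, rho: float, t: float) -> float:
    """Pixel of a reference-frame point (pixel u0, inverse depth rho)
    seen by a camera shifted sideways by t (unit focal length)."""
    # World point: X = u0 / rho, Z = 1 / rho, so u = (X - t) / Z = u0 - t * rho.
    return u0 - t * rho


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def gauss_newton_ba(obs, u0, t, rho, iters=50, damping=1e-9):
    """Jointly refine camera shifts t and inverse depths rho.

    obs[i][j] is the observed pixel of point j in camera i. t[0] is held
    fixed because (t, rho) and (s * t, rho / s) produce identical images.
    """
    t, rho = list(t), list(rho)
    m, n = len(t), len(rho)
    k = (m - 1) + n                        # unknowns: t[1:], then rho
    for _ in range(iters):
        H = [[0.0] * k for _ in range(k)]  # normal matrix J^T J
        g = [0.0] * k                      # gradient J^T r
        for i in range(m):
            for j in range(n):
                r = project(u0[j], rho[j], t[i]) - obs[i][j]
                J = [0.0] * k
                if i > 0:
                    J[i - 1] = -rho[j]     # d r / d t_i
                J[(m - 1) + j] = -t[i]     # d r / d rho_j
                for a in range(k):
                    g[a] += J[a] * r
                    for c in range(k):
                        H[a][c] += J[a] * J[c]
        for a in range(k):
            H[a][a] += damping             # tiny Levenberg-style damping
        delta = solve(H, [-v for v in g])
        for i in range(1, m):
            t[i] += delta[i - 1]
        for j in range(n):
            rho[j] += delta[(m - 1) + j]
    return t, rho


# Synthetic example: the first camera's shift (1.0) sets the scale.
true_t = [1.0, 2.5, -1.5]
true_rho = [0.5, 0.25, 0.2, 0.8]
u0 = [0.1, -0.3, 0.4, 0.0]
obs = [[project(u0[j], true_rho[j], ti) for j in range(4)] for ti in true_t]
t_est, rho_est = gauss_newton_ba(obs, u0, [1.0, 1.0, 1.0], [1.0] * 4)
# Expected to recover true_t and true_rho up to numerical precision.
```

Fixing one camera's translation removes the scale ambiguity that monocular geometry always has; without it the normal matrix would be singular.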

https://weibo.com/1402400261/KvblT4R9T

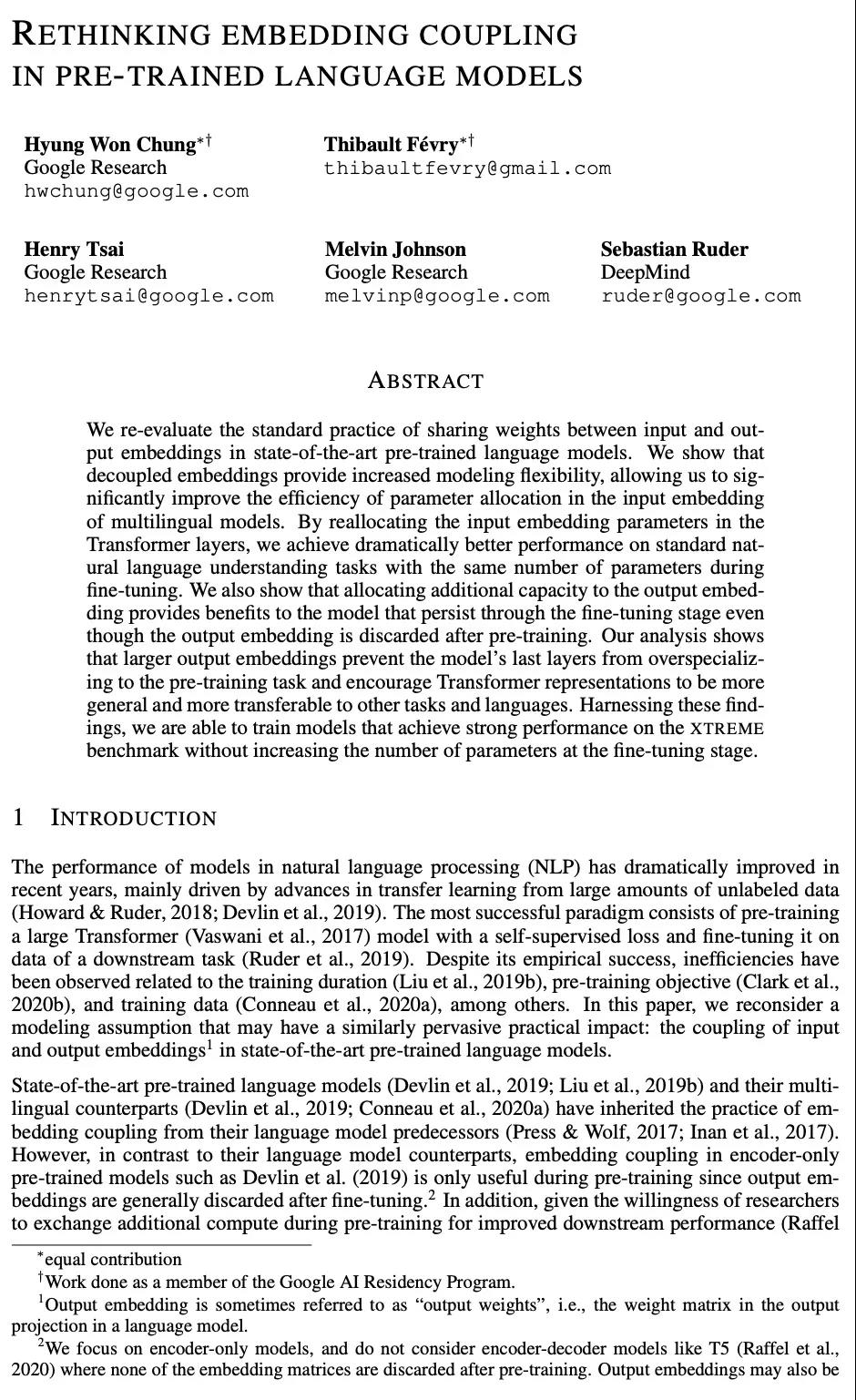

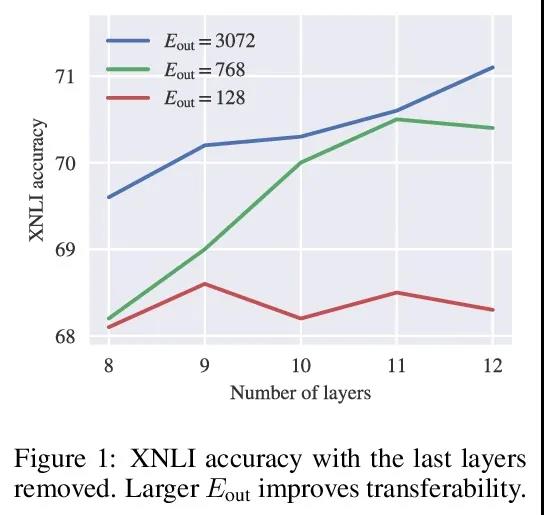

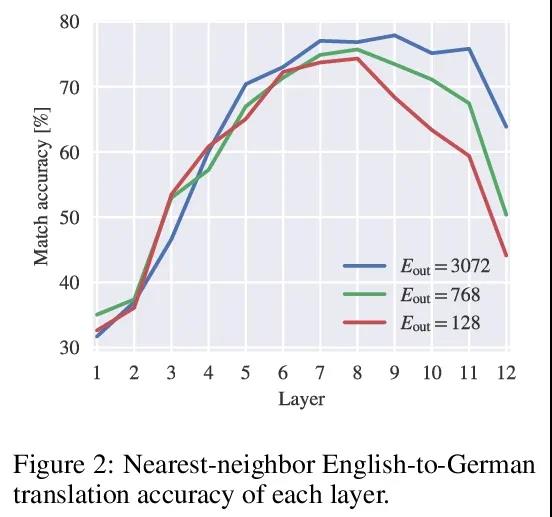

4. [CL] Rethinking embedding coupling in pre-trained language models

H W Chung, T Févry, H Tsai, M Johnson, S Ruder

[Google Research & DeepMind]

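Rethinking embedding coupling in pre-trained language models. This paper re-evaluates the standard practice of sharing weights between the input and output embeddings in state-of-the-art pre-trained language models. Decoupled embeddings offer greater modeling flexibility and substantially improve the efficiency of parameter allocation in the input embedding of multilingual models. By reallocating input-embedding parameters to the Transformer layers, the authors obtain significant gains on standard natural language understanding tasks with the same number of parameters during fine-tuning. Giving the output embedding extra capacity also benefits the model, and the benefit persists through fine-tuning even though the output embedding is discarded after pre-training. Analysis shows that a larger output embedding keeps the model's last layers from over-specializing to the pre-training task and encourages more general Transformer representations that transfer better to other tasks and languages. Building on these findings, the authors train models that perform strongly on the XTREME benchmark without increasing the number of parameters at the fine-tuning stage.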

We re-evaluate the standard practice of sharing weights between input and output embeddings in state-of-the-art pre-trained language models. We show that decoupled embeddings provide increased modeling flexibility, allowing us to significantly improve the efficiency of parameter allocation in the input embedding of multilingual models. By reallocating the input embedding parameters in the Transformer layers, we achieve dramatically better performance on standard natural language understanding tasks with the same number of parameters during fine-tuning. We also show that allocating additional capacity to the output embedding provides benefits to the model that persist through the fine-tuning stage even though the output embedding is discarded after pre-training. Our analysis shows that larger output embeddings prevent the model’s last layers from overspecializing to the pre-training task and encourage Transformer representations to be more general and more transferable to other tasks and languages. Harnessing these findings, we are able to train models that achieve strong performance on the XTREME benchmark without increasing the number of parameters at the fine-tuning stage.
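The parameter-reallocation argument is largely arithmetic. The sketch below counts embedding parameters for a tied model and for a decoupled one whose input embedding is a small lookup table followed by a projection to the hidden size. The vocabulary size (250,000), widths (768, 128, 1536), and the projection layout are hypothetical choices for illustration, not the paper's configurations; the function names are likewise hypothetical.

```python
from typing import Tuple


def tied_embedding_params(vocab: int, hidden: int) -> int:
    """One V x H matrix is shared by the input lookup and the output softmax."""
    return vocab * hidden


def decoupled_embedding_params(vocab: int, hidden: int,
                               in_dim: int, out_dim: int) -> Tuple[int, int]:
    """Return (input, output) parameter counts for untied embeddings.

    Assumed layout: input = V x in_dim lookup + in_dim x H projection;
    output = H x out_dim projection + V x out_dim softmax matrix.
    A projection is skipped when its width already equals H.
    """
    inp = vocab * in_dim + (in_dim * hidden if in_dim != hidden else 0)
    out = vocab * out_dim + (hidden * out_dim if out_dim != hidden else 0)
    return inp, out


V, H = 250_000, 768  # hypothetical multilingual vocabulary and hidden size

tied = tied_embedding_params(V, H)
inp, out = decoupled_embedding_params(V, H, in_dim=128, out_dim=768)

# The output embedding is dropped after pre-training, so only the input side
# remains at fine-tuning time; the difference could go into Transformer layers.
freed = tied - inp
print(f"tied: {tied:,}  decoupled input: {inp:,}  freed for layers: {freed:,}")
# tied: 192,000,000  decoupled input: 32,098,304  freed for layers: 159,901,696

# A wider output embedding costs parameters during pre-training only.
_, big_out = decoupled_embedding_params(V, H, in_dim=128, out_dim=1536)
```

With a large multilingual vocabulary the embedding matrix dominates the budget, which is why shrinking only the input side frees so many parameters at fine-tuning time.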

https://weibo.com/1402400261/Kvbqt0Kde

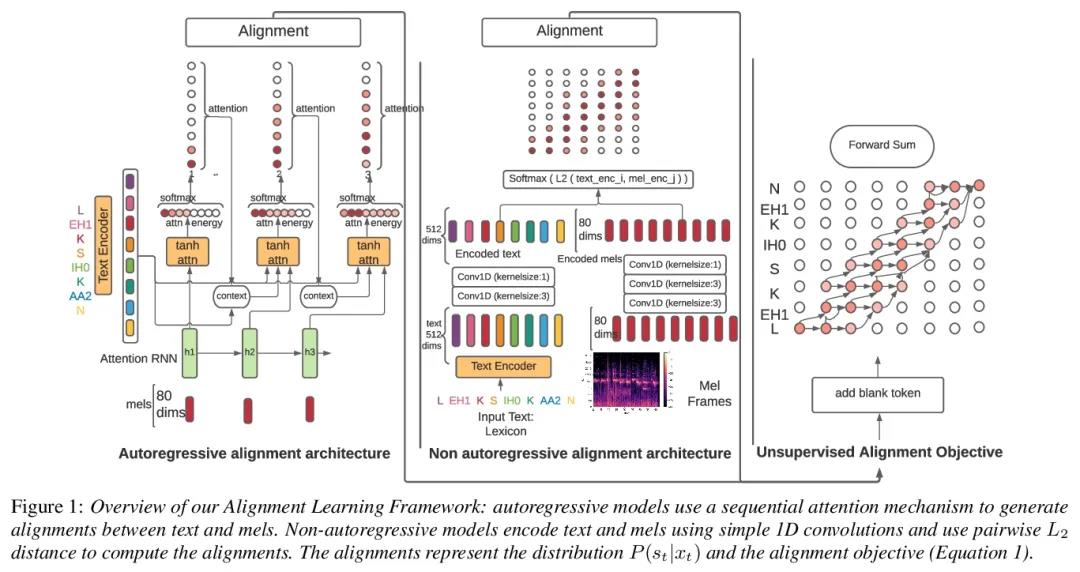

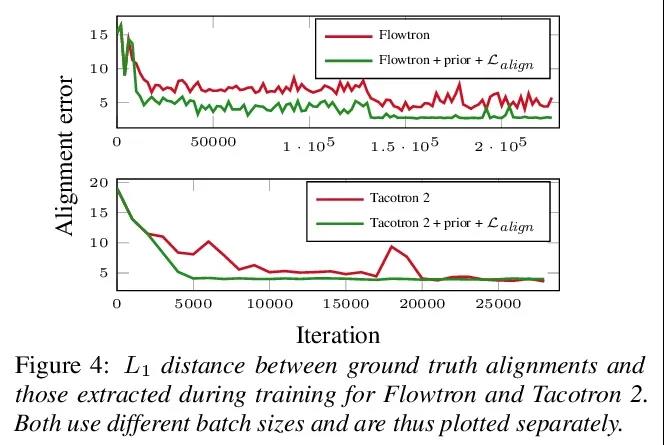

5. [AS] One TTS Alignment To Rule Them All

R Badlani, A Łańcucki, K J. Shih, R Valle, W Ping, B Catanzaro

[NVIDIA]

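One alignment framework for all TTS architectures. Speech-to-text alignment is a key component of neural text-to-speech (TTS) models. Autoregressive TTS models typically learn these alignments online with an attention mechanism. However, such alignments tend to be brittle and often fail to generalize to long utterances and out-of-domain text, causing missing or repeated words. Most non-autoregressive end-to-end TTS models rely on durations extracted from external sources. This paper uses the alignment mechanism proposed in RAD-TTS as a generic alignment-learning framework that is easily applied to a variety of neural TTS models. The framework combines the forward-sum algorithm, the Viterbi algorithm, and a simple and efficient static prior. Experiments show that the framework improves every TTS architecture tested, both autoregressive (Flowtron, Tacotron 2) and non-autoregressive (FastPitch, FastSpeech 2, RAD-TTS). It speeds up alignment convergence of existing attention-based mechanisms, simplifies the training pipeline, and makes models more robust to errors on long utterances. Most importantly, it improves perceived speech synthesis quality as judged by human evaluators.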

Speech-to-text alignment is a critical component of neural text-to-speech (TTS) models. Autoregressive TTS models typically use an attention mechanism to learn these alignments on-line. However, these alignments tend to be brittle and often fail to generalize to long utterances and out-of-domain text, leading to missing or repeating words. Most non-autoregressive end-to-end TTS models rely on durations extracted from external sources. In this paper we leverage the alignment mechanism proposed in RAD-TTS as a generic alignment learning framework, easily applicable to a variety of neural TTS models. The framework combines forward-sum algorithm, the Viterbi algorithm, and a simple and efficient static prior. In our experiments, the alignment learning framework improves all tested TTS architectures, both autoregressive (Flowtron, Tacotron 2) and non-autoregressive (FastPitch, FastSpeech 2, RAD-TTS). Specifically, it improves alignment convergence speed of existing attention-based mechanisms, simplifies the training pipeline, and makes the models more robust to errors on long utterances. Most importantly, the framework improves the perceived speech synthesis quality, as judged by human evaluators.
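The forward-sum and Viterbi algorithms are both dynamic programs over monotonic alignments. The sketch below is a plain-Python illustration, not the paper's implementation. It assumes a matrix `logp[t][n]` holding the log-probability that mel frame `t` aligns to text token `n` (for example, a per-frame softmax over tokens), and that every alignment starts at the first token, ends at the last, and advances by at most one token per frame. The static prior is assumed here to be a beta-binomial with alpha = omega * t and beta = omega * (T - t + 1); treat that parameterization as an assumption. All function names are hypothetical.

```python
import math

NEG_INF = float("-inf")


def logaddexp(a: float, b: float) -> float:
    """log(exp(a) + exp(b)), safe when either argument is -inf."""
    if a == NEG_INF:
        return b
    if b == NEG_INF:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def forward_sum_log_likelihood(logp):
    """Log of the probability summed over all monotonic alignments."""
    T, N = len(logp), len(logp[0])
    alpha = [NEG_INF] * N
    alpha[0] = logp[0][0]              # every path starts on token 0
    for t in range(1, T):
        # Each frame either stays on token n or advances from token n - 1.
        alpha = [logp[t][n] + logaddexp(alpha[n], alpha[n - 1] if n > 0 else NEG_INF)
                 for n in range(N)]
    return alpha[N - 1]                # every path ends on the last token


def viterbi_durations(logp):
    """Best single monotonic alignment, returned as frames per token."""
    T, N = len(logp), len(logp[0])
    score = [[NEG_INF] * N for _ in range(T)]
    back = [[0] * N for _ in range(T)]  # 1 if token n was entered at frame t
    score[0][0] = logp[0][0]
    for t in range(1, T):
        for n in range(N):
            stay = score[t - 1][n]
            advance = score[t - 1][n - 1] if n > 0 else NEG_INF
            if advance > stay:
                score[t][n], back[t][n] = logp[t][n] + advance, 1
            else:
                score[t][n] = logp[t][n] + stay
    durations, n = [0] * N, N - 1
    for t in range(T - 1, -1, -1):     # backtrack from the last frame/token
        durations[n] += 1
        if t > 0 and back[t][n]:
            n -= 1
    return durations


def beta_binomial_log_prior(T, N, omega=1.0):
    """Static log prior[t][n] that favours a near-diagonal alignment."""
    def log_beta(a, b):
        return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    prior = []
    for t in range(1, T + 1):
        a, b = omega * t, omega * (T - t + 1)
        prior.append([math.lgamma(N) - math.lgamma(k + 1) - math.lgamma(N - k)
                      + log_beta(k + a, N - 1 - k + b) - log_beta(a, b)
                      for k in range(N)])
    return prior


# Toy example: 6 frames, 3 tokens; frames 0-1 favour token 0,
# frames 2-4 favour token 1, frame 5 favours token 2.
favoured = [0, 0, 1, 1, 1, 2]
logp = [[math.log(0.8 if n == f else 0.1) for n in range(3)] for f in favoured]
prior = beta_binomial_log_prior(6, 3)
biased = [[lp + pr for lp, pr in zip(lr, prr)] for lr, prr in zip(logp, prior)]
loss = -forward_sum_log_likelihood(biased)  # soft-alignment training loss
durations = viterbi_durations(logp)         # hard alignment -> [2, 3, 1]
```

In this sketch the forward-sum term is differentiable with respect to `logp`, so its negative can serve as a loss, while Viterbi extracts the hard per-token durations that a non-autoregressive model would consume.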

https://weibo.com/1402400261/Kvbxnf9fA

Other papers worth noting:

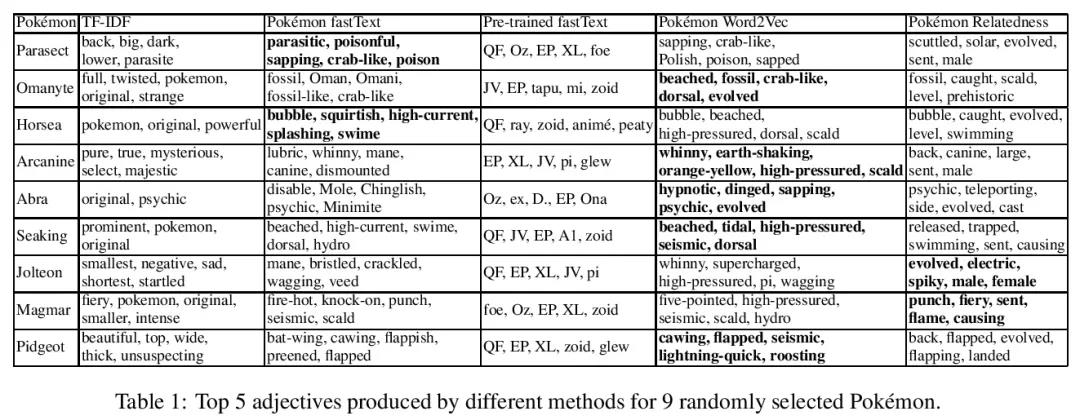

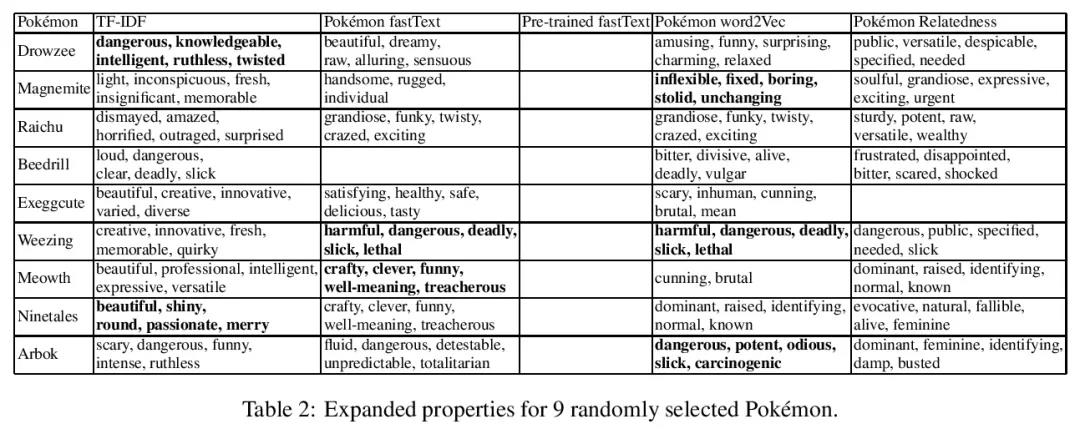

[CL] How Cute is Pikachu? Gathering and Ranking Pokémon Properties from Data with Pokémon Word Embeddings

M Hämäläinen, K Alnajjar, N Partanen

[University of Helsinki]

https://weibo.com/1402400261/KvbubxXqy

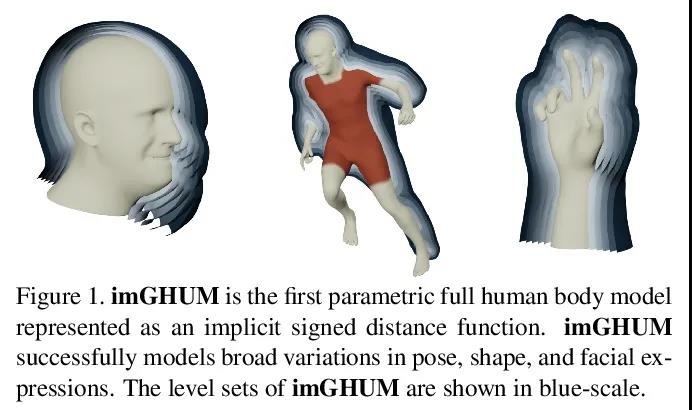

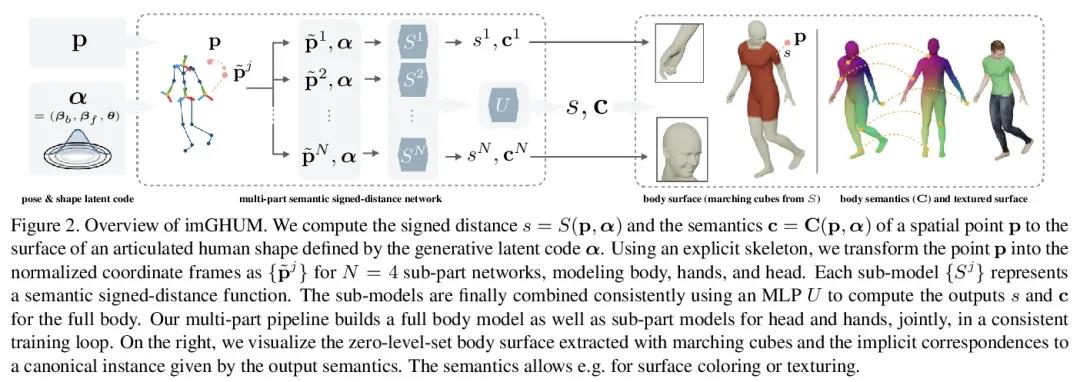

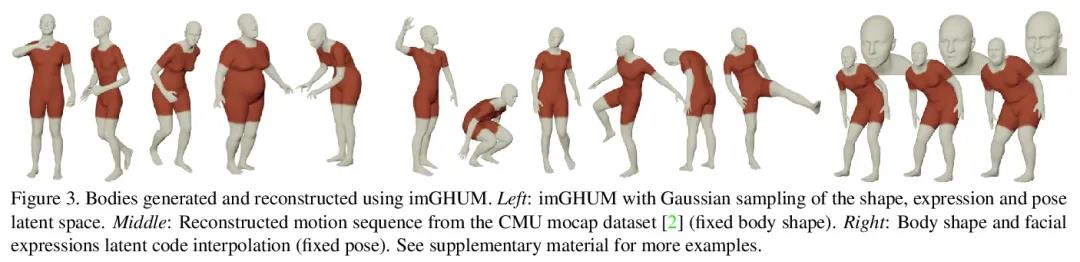

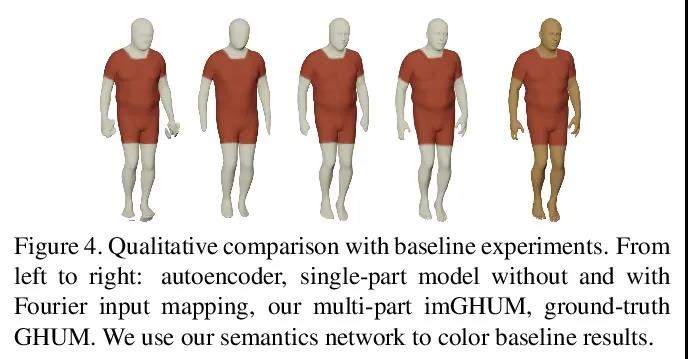

[CV] imGHUM: Implicit Generative Models of 3D Human Shape and Articulated Pose

T Alldieck, H Xu, C Sminchisescu

[Google Research]

https://weibo.com/1402400261/KvbCsqVri

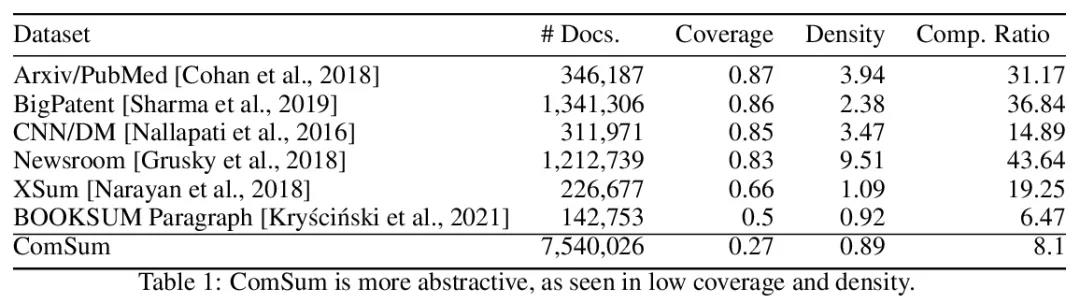

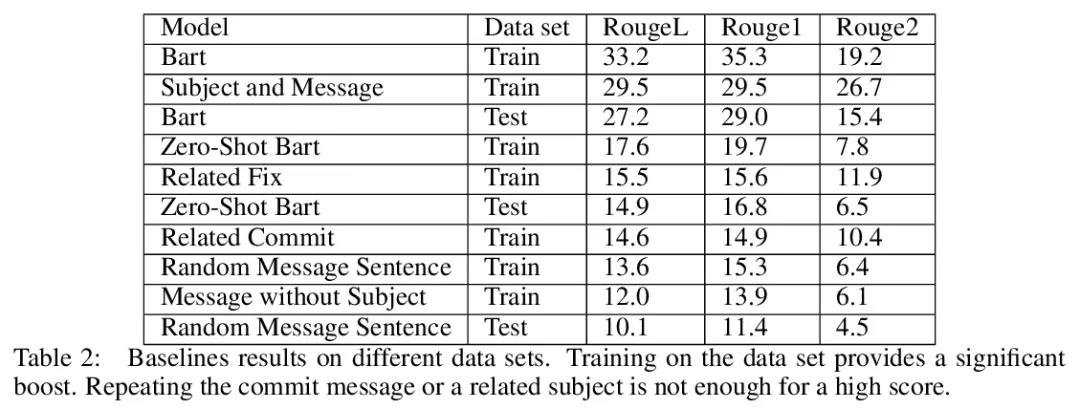

[CL] ComSum: Commit Messages Summarization and Meaning Preservation

ComSum: a text-summarization dataset of code commit messages

L Choshen, I Amit

[Hebrew University of Jerusalem]

https://weibo.com/1402400261/KvbF5h4DZ

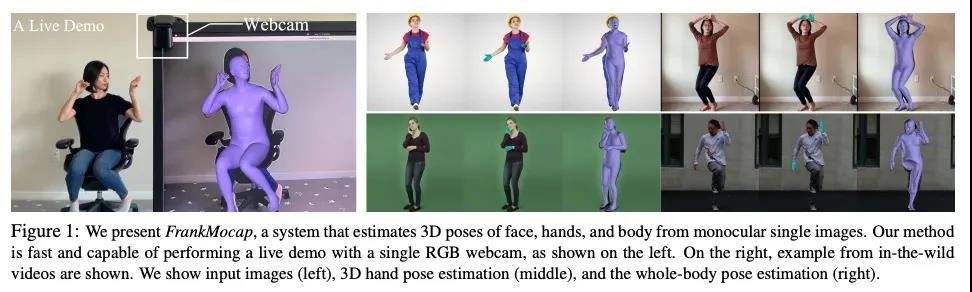

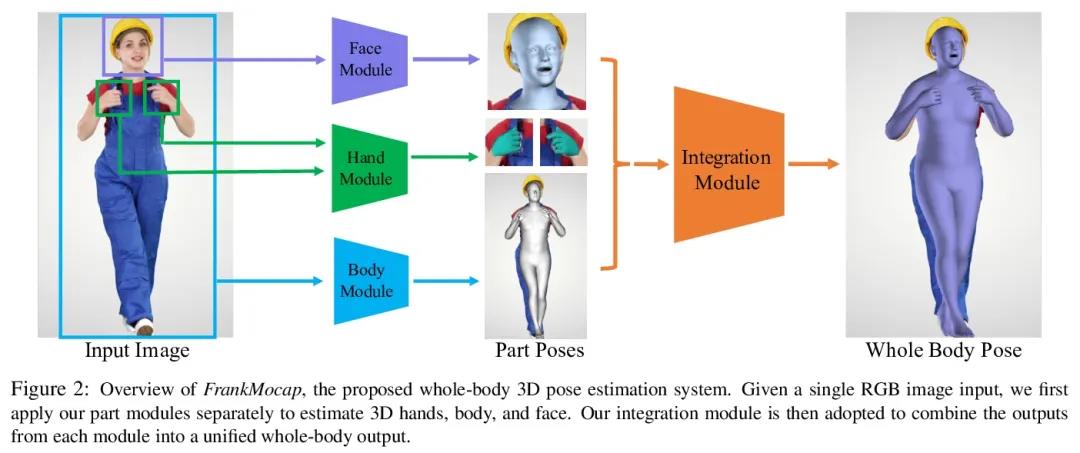

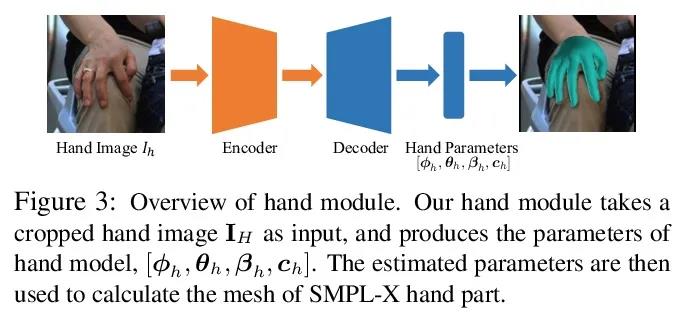

[CV] FrankMocap: A Monocular 3D Whole-Body Pose Estimation System via Regression and Integration

Y Rong, T Shiratori, H Joo

[The Chinese University of Hong Kong & Facebook Reality Labs & Facebook AI Research]

https://weibo.com/1402400261/KvbHx90aT

If any images in this post raise copyright concerns, please contact us and we will remove them promptly.