[CV] What do Vision Transformers Learn? A Visual Exploration

A Ghiasi, H Kazemi, E Borgnia, S Reich, M Shu, M Goldblum, A G Wilson, T Goldstein

[University of Maryland & New York University]

视觉Transformer学到了什么? 一种可视化探索

要点:

-

对ViT进行可视化分析,以了解其工作原理和学习内容; -

语言模型监督下训练的ViT神经元被语义概念而不是视觉特征激活; -

Transformer检测图像背景特征,相比CNN更少依赖于高频信息; -

Transformer和CNN在浅层学习纹理属性,在深层学习高级对象特征或抽象概念。

摘要:

视觉transformer(ViT)已经迅速成为计算机视觉的事实架构,然而对其工作原因和学习内容了解甚少。虽然现有的研究对卷积神经网络的机制进行了可视化分析,但对ViT进行类似的探索仍然具有挑战性。本文首先解决了对ViT进行可视化分析的障碍。在这些解决方案的协助下,观察到在语言模型监督下训练的ViT(例如CLIP)的神经元被语义概念而不是视觉特征激活。本文还探索了ViT和CNN之间的基本差异,发现transformer检测图像背景特征,像卷积一样,但其预测对高频信息的依赖要少得多。另一方面,两种架构类型在特征从浅层的抽象模式发展到深层的具体对象的方式上表现相似。ViTs在除最后一层外的所有层中都保持着空间信息。本文表明最后一层最可能丢弃空间信息,并表现为一个学习的全局池化操作。在广泛的ViT变体上进行了大规模的可视化,包括DeiT, CoaT, ConViT, PiT, Swin和Twin,以验证所提出方法的有效性。

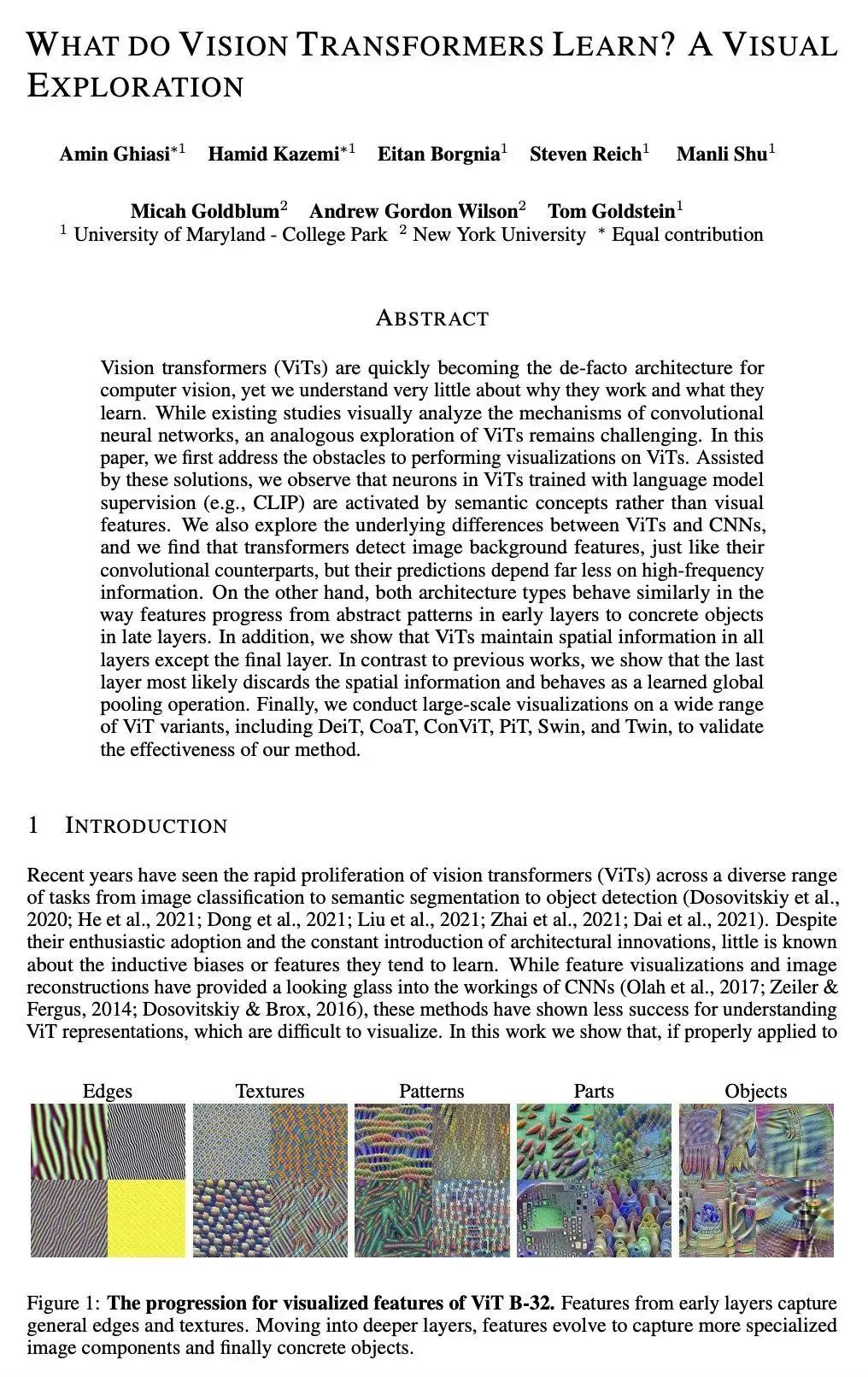

Vision transformers (ViTs) are quickly becoming the de-facto architecture for computer vision, yet we understand very little about why they work and what they learn. While existing studies visually analyze the mechanisms of convolutional neural networks, an analogous exploration of ViTs remains challenging. In this paper, we first address the obstacles to performing visualizations on ViTs. Assisted by these solutions, we observe that neurons in ViTs trained with language model supervision (e.g., CLIP) are activated by semantic concepts rather than visual features. We also explore the underlying differences between ViTs and CNNs, and we find that transformers detect image background features, just like their convolutional counterparts, but their predictions depend far less on high-frequency information. On the other hand, both architecture types behave similarly in the way features progress from abstract patterns in early layers to concrete objects in late layers. In addition, we show that ViTs maintain spatial information in all layers except the final layer. In contrast to previous works, we show that the last layer most likely discards the spatial information and behaves as a learned global pooling operation. Finally, we conduct large-scale visualizations on a wide range of ViT variants, including DeiT, CoaT, ConViT, PiT, Swin, and Twin, to validate the effectiveness of our method.

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢