From today's 爱可可 AI frontier picks

[LG] Diffusion Art or Digital Forgery? Investigating Data Replication in Diffusion Models

G Somepalli, V Singla, M Goldblum, J Geiping, T Goldstein

[University of Maryland & New York University]

Diffusion art or digital plagiarism? A study of data replication in diffusion models

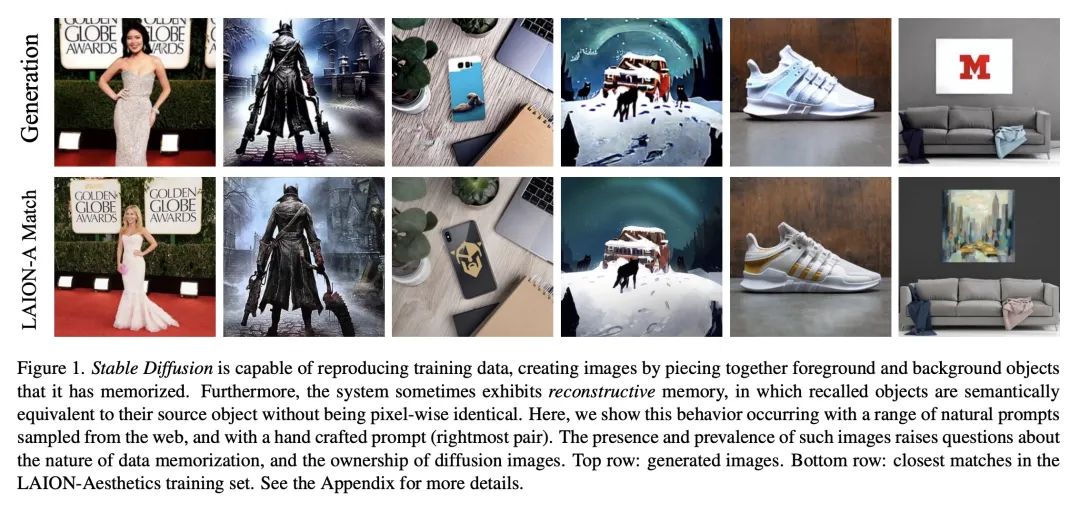

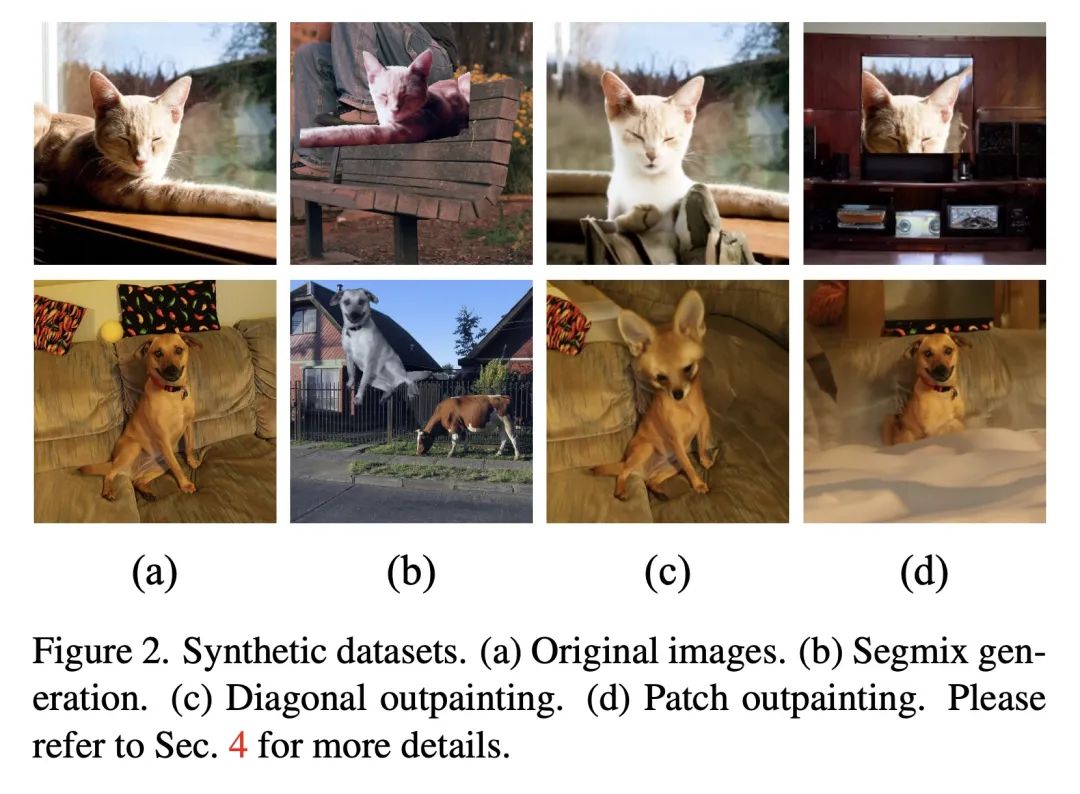

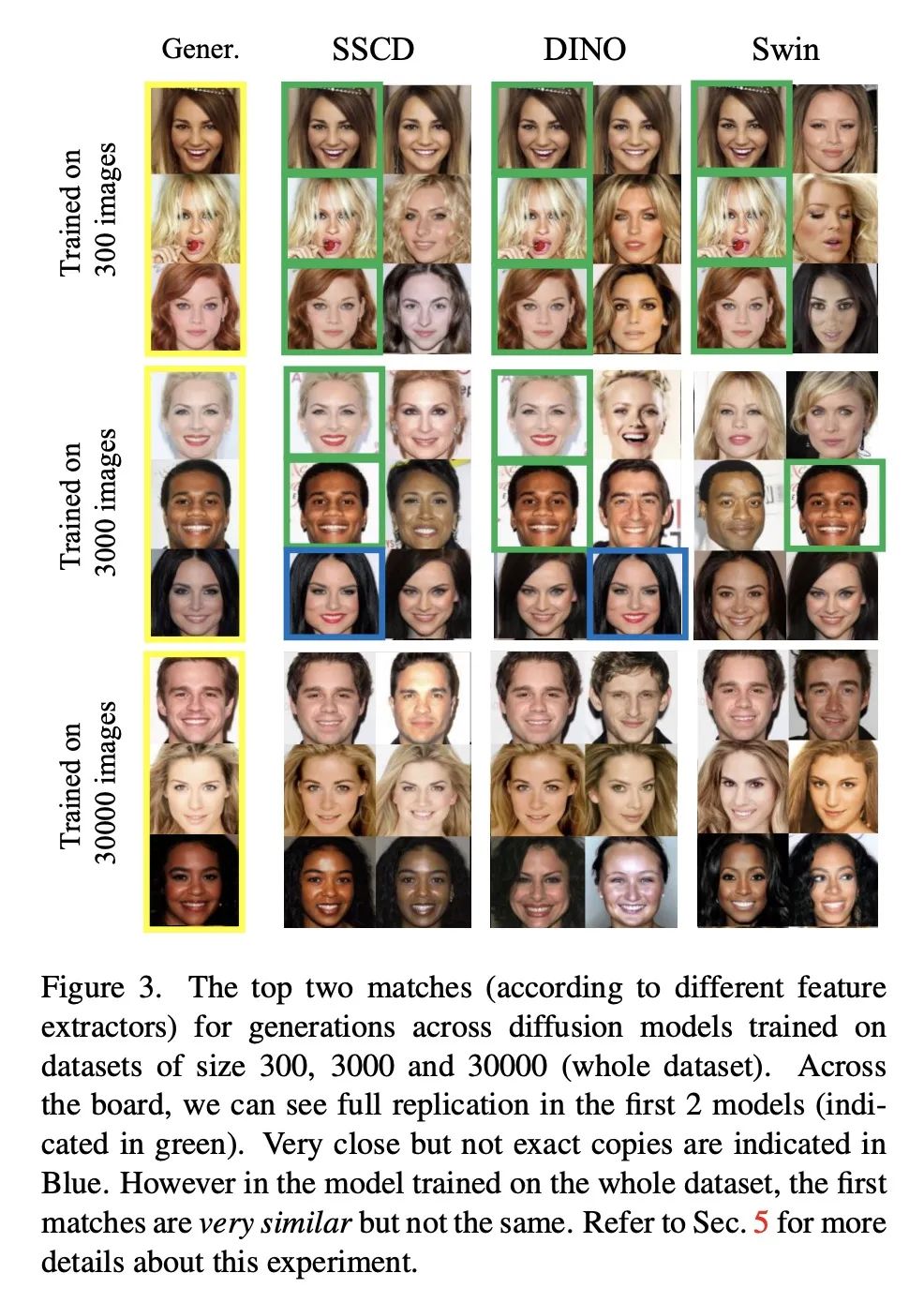

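Summary: This study assesses whether diffusion models replicate content from their training data. Applying an image retrieval framework to a range of datasets, the authors find that models can replicate images from outside the aesthetics split of the LAION dataset, so the true amount of replication may be larger than the retrieval method used here can measure.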

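Abstract: State-of-the-art diffusion models produce high-quality, customizable images that can be used for commercial art and graphic design. But do diffusion models create unique works of art, or do they plagiarize content directly from their training sets? The paper studies image retrieval frameworks that make it possible to compare generated images with training samples and detect whether content has been copied. Applying these frameworks to diffusion models trained on several datasets, including Oxford flowers, Celeb-A, ImageNet, and LAION, it discusses how factors such as training set size affect the rate of content replication. It also identifies cases where diffusion models, including the popular Stable Diffusion model, blatantly copy their training data.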

Link: https://arxiv.org/abs/2212.03860

Cutting-edge diffusion models produce images with high quality and customizability, enabling them to be used for commercial art and graphic design purposes. But do diffusion models create unique works of art, or are they stealing content directly from their training sets? In this work, we study image retrieval frameworks that enable us to compare generated images with training samples and detect when content has been replicated. Applying our frameworks to diffusion models trained on multiple datasets including Oxford flowers, Celeb-A, ImageNet, and LAION, we discuss how factors such as training set size impact rates of content replication. We also identify cases where diffusion models, including the popular Stable Diffusion model, blatantly copy from their training data.
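The retrieval idea in the abstract (compare each generated image with training samples and flag close matches) can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes every image has already been mapped to an embedding vector by some feature extractor, and the function names `top_matches` and `flag_replication`, the toy vectors, and the 0.9 threshold are all hypothetical choices made for illustration.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def top_matches(generated, training, k=1):
    """For each generated embedding, return its k most similar training
    embeddings as a list of (training_index, cosine_similarity) pairs."""
    train_unit = [normalize(t) for t in training]
    results = []
    for g in generated:
        g_unit = normalize(g)
        scored = [
            (idx, sum(a * b for a, b in zip(g_unit, t)))
            for idx, t in enumerate(train_unit)
        ]
        # Highest similarity first.
        scored.sort(key=lambda pair: pair[1], reverse=True)
        results.append(scored[:k])
    return results


def flag_replication(generated, training, threshold=0.9):
    """Return (generated_index, training_index, similarity) for every generated
    embedding whose best training match reaches the threshold.
    The threshold is an arbitrary illustrative value, not one from the paper."""
    flagged = []
    for g_idx, matches in enumerate(top_matches(generated, training, k=1)):
        t_idx, sim = matches[0]
        if sim >= threshold:
            flagged.append((g_idx, t_idx, sim))
    return flagged


if __name__ == "__main__":
    # Toy embeddings standing in for real image features (hypothetical data).
    training = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    generated = [[0.9, 0.1, 0.0], [1.0, 1.0, 1.0]]
    for g_idx, t_idx, sim in flag_replication(generated, training):
        print(f"generated {g_idx} is close to training {t_idx} (similarity {sim:.3f})")
```

A brute-force scan like this only suits small sets. At the scale of LAION an approximate nearest-neighbor index would be needed, and the choice of feature extractor largely decides what counts as a copy.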
